Overview

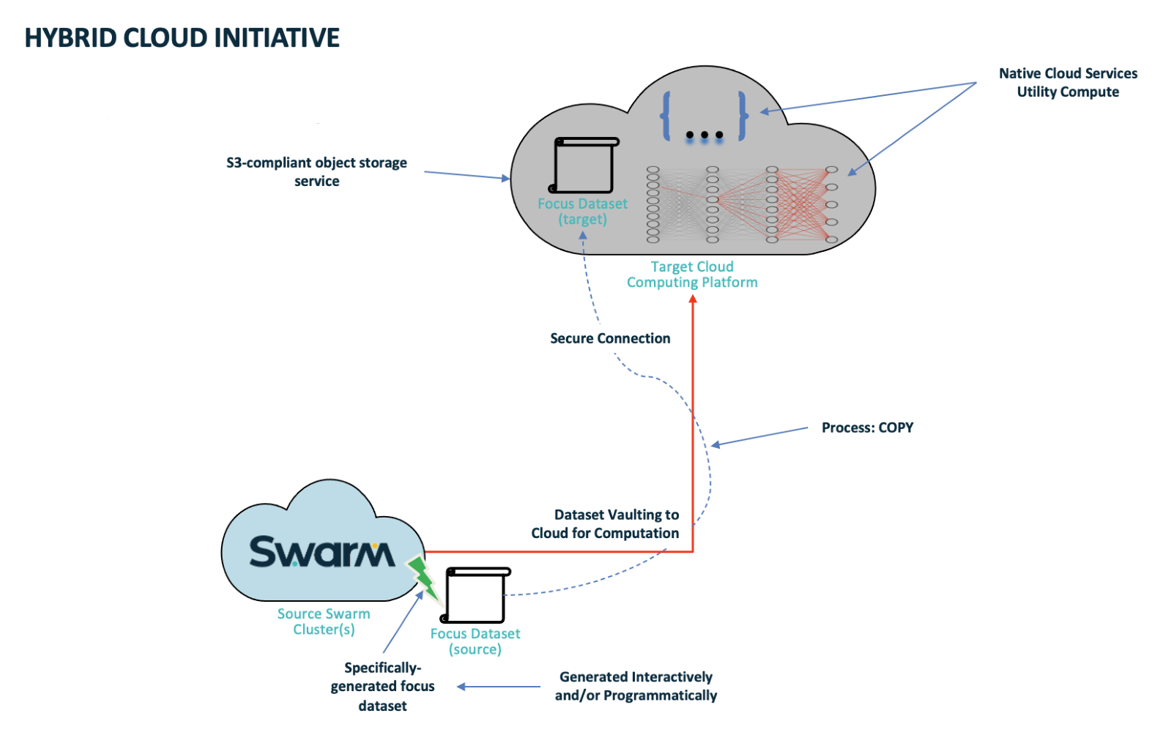

Swarm Hybrid Cloud provides the capability to copy objects from Swarm to a target S3 cloud storage destination. Native cloud services and/or applications running on utility computing can work with data directly from the target cloud storage. Future releases provide additional capabilities.

Capabilities

The Content UI provides access to the capabilities.

It functions with the AWS S3 object storage service and supports general S3 buckets as the target cloud storage.

It uses the provided target bucket with an S3-valid name, endpoint, access key, and secret key.

It uses a selected dataset to copy to the cloud (known as the ‘focus dataset’). This can be a bucket or dynamic set of criteria from a collection of the Swarm source.

The focus dataset remains in the native format in the target cloud storage, and users/applications/services read the focus dataset directly in the target cloud storage without any additional steps.

The integrity of all objects in the focus dataset is preserved as it is copied to the target cloud storage. Status is provided with the result of each copy from the focus dataset for reference and verification.

The object metadata can be preserved, modified, or removed in part depending on the target storage system.

Objects are transferred securely.

The initial release enables the focus dataset transfer to be initiated on a manual basis.

Info

Each object from the focus dataset is copied to the cloud and does not move to the cloud. They remain within the Swarm namespace as the authoritative copy and remain searchable in the Swarm namespace after the copy.

The “folder” path of each object is preserved.

The payload size and hash integrity are checked at the target cloud storage and reported in the log file.

The target bucket may exist already. If not, create a target bucket using the provided credentials. Either way, target credentials need permission for bucket creation. This functionality may change in future releases.

Prerequisites

Docker Community Edition is installed and must be started on the Gateway server(s).

See https://docs.docker.com/config/daemon/systemd/?msclkid=36e68349c0d411ecb438f130e19228bc for starting a Docker, and https://docs.docker.com/engine/install/linux-postinstall/#configure-docker-to-start-on-boot for setting up Docker to start on the server restart.A bucket with focus dataset to copy is created on Swarm UI.

A bucket is created on the target cloud storage service.

Both token ID and S3 secret key are generated on the target cloud storage service.

Usage

Swarm Hybrid Cloud feature is accessible to clients via Content UI. Clients need to select a specific dataset to copy to the cloud, which can be either a collection or a bucket. Provide the target bucket details, e.g., endpoint, access key & secret key. Results for each object are provided in the source bucket as a status file. The focus dataset is defined shortly after the job is triggered and is not redefined during execution. Use the generated dictionary and log files to review the job.

Workflow

Content UI

Hybrid Cloud helps in replicating the focus dataset, therefore, the client needs two environments:

Swarm UI

Target cloud storage service

Copy all the data from the source location (Swarm) to the destination (client’s target cloud storage service). It is applied at the bucket level. The entire data residing in a bucket can be copied and then placed at the destination. This whole process of replicating data needs job creation at the source location.

Create a job at the bucket level to start copying the focus dataset to the target cloud storage service.

Irrespective of the focus dataset was copied successfully or not, there are two or more files created after the job submission:

Manifest File - Contains information such as total object count & size, endpoint, target bucket name, access key, and secret key of focus dataset copied.

Dictionary File(s) - Contains the list of all focus datasets copied. The dictionary file displays the name of each object along with the size in bytes.

Log File - Provides the current status of each object of the focus dataset copied, along with details from the final check. The log file is generated after the objects are queued up to copy and, is refreshed every two minutes. There are four potential statuses for each object:

Pending - An initial state where the object is queued up for the copy.

Failed - When an object is failed to copy to the target cloud storage service. Reasons include the target endpoint is not accessible, or any issue with an individual object, such as too large object name for S3. See the Gateway server log for failure details.

Copied - When an object is successfully copied.

Skipped - When an object is skipped. Reasons include the object already exists at the destination, the object does not exist at the source, or the object is marked for deletion and cannot be copied.

Each file is important and provides information about the focus dataset copied from the Swarm UI to the target cloud storage service. The format of these may change in future releases. The Manifest and Log files are overwritten if the same job name is used from a previous run, so save the files or use a different job name if this is not desired.

If required, renaming of a single or all files is possible after the copying has started. Any log file updates continue under the old name.

Replicating data to the destination

Refer to the following steps to replicate the focus dataset:

Navigate to the Swarm UI bucket or collection to copy.

Click Actions (three gear icons) and select Copy to S3. A modal presents a form, with required fields marked with asterisks (*) as shown in the example below:

Job Name - Provide a unique name for the job. The manifest and log files are overwritten if a job name is reused from a previous run.

End Point - A target service endpoint.

For AWS S3 targets - The format is shown in the screenshot above.

For Swarm targets - The value needs to be in the following format with HTTP or HTTPS as needed:

http://${DOMAIN}:${S3_PORT}

Region - The S3 region to use. Some S3 providers may not require region.

Bucket - Enter the target bucket name.

Access Key - An access key for the target bucket and must be generated within the target cloud storage service.

Secret Key - An S3 secret key. It is generated with the access key.

Click Begin S3 Copy. This button is enabled once all required text fields are filled.

The copy operation generates the earlier objects (manifest, dictionary object, and log). All objects use the given job name as a prefix but are appended with separate suffixes. The duration of the job depends on the size of the job (the count of objects and the total number of bytes to be transferred). Download and open the latest copy of the status log to monitor the status of the job.

Verify Docker is installed and started, and has been restarted after reboot if the request fails to submit.

Some errors may display after successful request initiation while the errors that do not display in the page details or status log file are detailed in the Gateway log. Verify the endpoint and access keys are correct and not expired.

.png?version=1&modificationDate=1643299918421&cacheVersion=1&api=v2)