...

The “folder” path of each object is preserved.

The payload size and hash integrity are checked at the target storage and reported in the log file.

The target bucket may already exist. If it does not, a target bucket or container is created using the supplied credentials. Either way, the target credentials need permission to create buckets or containers. This behavior may change in future releases.

Objects with Unicode in the name are supported.

Up to 50,000 objects are supported at a time.

Future releases will provide additional capabilities.
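The payload size and hash check can be illustrated with a short sketch. It assumes SHA-256 as the hash and takes both payloads as readable binary streams. The hash the copy tool actually uses, and how it reads the target object, are not stated here, so `size_and_digest` and `payloads_match` are hypothetical helpers.

```python
import hashlib
import io
from typing import BinaryIO, Tuple


def size_and_digest(stream: BinaryIO, algorithm: str = "sha256",
                    chunk_size: int = 1 << 20) -> Tuple[int, str]:
    """Return (byte count, hex digest) of a stream, reading it in chunks."""
    hasher = hashlib.new(algorithm)
    size = 0
    # Read fixed-size chunks so large payloads never have to fit in memory.
    for chunk in iter(lambda: stream.read(chunk_size), b""):
        hasher.update(chunk)
        size += len(chunk)
    return size, hasher.hexdigest()


def payloads_match(source: BinaryIO, target: BinaryIO,
                   algorithm: str = "sha256") -> bool:
    """True only when both payloads have the same size and the same digest."""
    return size_and_digest(source, algorithm) == size_and_digest(target, algorithm)


if __name__ == "__main__":
    # In-memory stand-ins for a source object and its copy at the target.
    original = io.BytesIO(b"example payload")
    copied = io.BytesIO(b"example payload")
    print(payloads_match(original, copied))  # prints True
```

Comparing size as well as digest catches truncated transfers cheaply and makes a log entry easier to interpret.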

| Info |
|---|
| If a copy of more than 50,000 objects fails, break up the task so that each run covers a single folder or a subset of objects selected with a strict collection search. |
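One way to plan such a split is sketched below. It assumes you already have the list of object names, for example from a bucket listing. It groups the names by top-level folder, then cuts any folder that is still too large into slices of at most 50,000. `plan_batches` is a hypothetical helper. Each resulting batch would still need to be expressed as a folder path or a strict collection search in the UI.

```python
from collections import defaultdict
from typing import Dict, Iterable, List

MAX_OBJECTS_PER_JOB = 50_000  # per-job limit stated in this section


def plan_batches(names: Iterable[str],
                 limit: int = MAX_OBJECTS_PER_JOB) -> List[List[str]]:
    """Group object names by top-level folder, then split groups larger than `limit`."""
    groups: Dict[str, List[str]] = defaultdict(list)
    for name in names:
        # Objects with no "/" in the name sit at the bucket root (group key "").
        top_folder = name.split("/", 1)[0] if "/" in name else ""
        groups[top_folder].append(name)

    batches: List[List[str]] = []
    for folder in sorted(groups):
        members = groups[folder]
        # Slice oversized folders into consecutive chunks of at most `limit` names.
        for start in range(0, len(members), limit):
            batches.append(members[start:start + limit])
    return batches
```

For example, `plan_batches(["a/1", "a/2", "a/3", "b/1", "readme.txt"], limit=2)` returns `[["readme.txt"], ["a/1", "a/2"], ["a/3"], ["b/1"]]`. Grouping by folder first keeps most batches aligned with something the UI can select directly.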

Prerequisites

Gateway is version 7.10 or later (7.8 supports only minimal Copy to S3 functionality).

Content Portal User Interface is version 7.7 or later (7.5 supports only minimal Copy to S3 functionality).

Docker Community Edition is installed and running on the Gateway server(s).

See https://docs.docker.com/config/daemon/systemd/ for starting Docker, and https://docs.docker.com/engine/install/linux-postinstall/#configure-docker-to-start-on-boot for configuring Docker to start when the server restarts.

A focus dataset is defined. This may be an entire bucket, a folder within a bucket, or a collection scoped to content within a single bucket.

A bucket or container is created on the remote cloud storage service.

Keys or credentials are available for remote cloud storage.
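The version prerequisites are easy to get wrong when versions are compared as text, because "7.10" sorts before "7.8" as a string. The sketch below compares dotted versions numerically. It also checks that the Docker daemon answers `docker info`. How you obtain the installed Gateway and Content Portal version strings is not covered here, and the helper names are hypothetical.

```python
import subprocess
from itertools import zip_longest
from typing import Tuple


def parse_version(text: str) -> Tuple[int, ...]:
    """Turn '7.10.1' into (7, 10, 1)."""
    return tuple(int(part) for part in text.strip().split("."))


def meets_minimum(installed: str, minimum: str) -> bool:
    """Compare numerically, padding the shorter version with zeros."""
    pairs = zip_longest(parse_version(installed), parse_version(minimum), fillvalue=0)
    for have, need in pairs:
        if have != need:
            return have > need
    return True  # the versions are equal


def docker_running(timeout: float = 10.0) -> bool:
    """True if the docker CLI exists and `docker info` succeeds (daemon reachable)."""
    try:
        result = subprocess.run(["docker", "info"], capture_output=True, timeout=timeout)
    except (OSError, subprocess.TimeoutExpired):
        # CLI missing or not executable, or the daemon did not answer in time.
        return False
    return result.returncode == 0
```

With version strings in hand, `meets_minimum(gateway_version, "7.10")` and `meets_minimum(portal_version, "7.7")` express the two requirements above.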

...

Follow these steps to replicate the focus dataset:

In the Swarm UI, navigate to the bucket or collection to copy.

Click Actions (three gears icon) and select either “Push to cloud” or “Pull from cloud” (formerly Copy to S3). Select S3 or Azure depending on the remote endpoint.

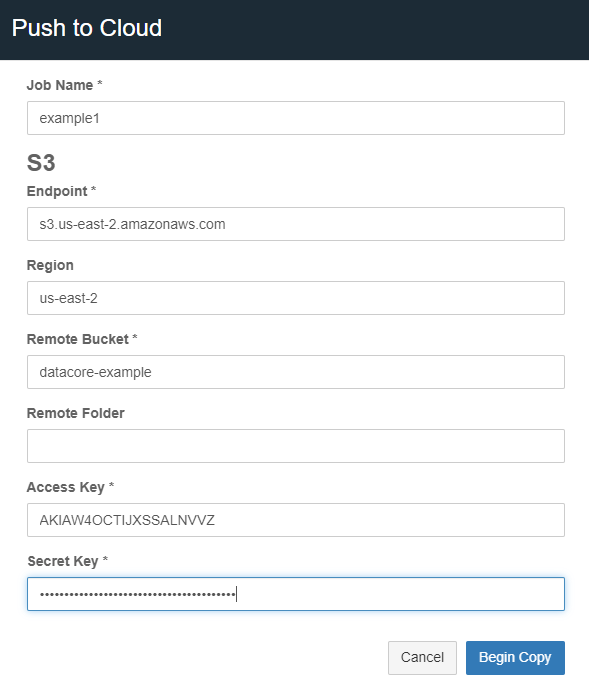

A modal form opens. Required fields are marked with an asterisk (*), as shown in the example below:

...

Job Name - Provide a unique name for the job. The manifest and log files are overwritten if a job name is reused from a previous run.

Local Path - Provide the bucket name and any optional subfolders. If the field is left blank, objects are copied directly to the bucket. This field applies when pulling from the cloud.

Object Selection - Applies when pulling from the cloud.

All in the remote path - Select this option to copy all the objects/files from the remote location.

Only objects matching the current collection/bucket list - Select this option to filter the copy so that only the objects/files that already exist in the Swarm destination are repatriated and updated to the remote version.
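The two Object Selection options can be pictured as set operations on object names. The sketch below assumes objects are matched by name relative to the chosen path. The first option takes every remote name, and the second keeps only the remote names that already exist in Swarm. `select_for_pull` is hypothetical, and the tool's actual matching rule is not specified here.

```python
from typing import Iterable, List


def select_for_pull(remote_names: Iterable[str], local_names: Iterable[str],
                    only_existing: bool) -> List[str]:
    """Return the remote object names a pull would copy, in sorted order."""
    remote = set(remote_names)
    if not only_existing:
        # "All in the remote path": copy everything found remotely.
        return sorted(remote)
    # "Only objects matching the current collection/bucket list":
    # refresh objects already present in Swarm and skip remote-only ones.
    return sorted(remote & set(local_names))
```

Local objects that have no remote counterpart are never touched by either option in this model.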

S3

Endpoint - A remote service endpoint.

For AWS S3 endpoints - The format is shown in the screenshot above.

For Swarm endpoints - Use the following format, with HTTP or HTTPS as needed:

https://{DOMAIN}:{S3_PORT}

Region - The S3 region to use. Some S3 providers do not require a region.

Remote Bucket - Enter the remote bucket name.

Remote Folder - An optional folder path within the remote bucket.

Access Key - An access key for the remote bucket. It must be generated within the remote cloud storage service.

Secret Key - The S3 secret key generated together with the access key.
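Before starting a job, it can help to check the S3 fields outside the UI. The sketch below is an illustration, not part of the product, and `S3Target` and its methods are hypothetical. It reports empty required fields and checks that a Swarm endpoint has the `https://{DOMAIN}:{S3_PORT}` shape. It also maps the fields onto keyword arguments that `boto3.client` accepts (`service_name`, `endpoint_url`, `region_name`, `aws_access_key_id`, `aws_secret_access_key`).

```python
from dataclasses import dataclass
from typing import Any, Dict, List, Optional
from urllib.parse import urlsplit


@dataclass
class S3Target:
    """Hypothetical container for the S3 fields of the copy form."""
    endpoint: str
    remote_bucket: str
    access_key: str
    secret_key: str
    region: Optional[str] = None  # some S3 providers do not need one
    remote_folder: str = ""       # optional key prefix; not a client setting

    def missing_required(self) -> List[str]:
        """Names of required fields that are empty or whitespace only."""
        required = {
            "endpoint": self.endpoint,
            "remote_bucket": self.remote_bucket,
            "access_key": self.access_key,
            "secret_key": self.secret_key,
        }
        return [name for name, value in required.items() if not value.strip()]

    def is_swarm_style_endpoint(self) -> bool:
        """True for http(s)://host:port with an explicit port and no path."""
        parts = urlsplit(self.endpoint)
        try:
            port = parts.port  # raises ValueError for a non-numeric or out-of-range port
        except ValueError:
            return False
        return (parts.scheme in ("http", "https") and bool(parts.hostname)
                and port is not None and parts.path in ("", "/"))

    def boto3_client_kwargs(self) -> Dict[str, Any]:
        """Keyword arguments for boto3.client(...) built from the form fields."""
        kwargs: Dict[str, Any] = {
            "service_name": "s3",
            "endpoint_url": self.endpoint,
            "aws_access_key_id": self.access_key,
            "aws_secret_access_key": self.secret_key,
        }
        if self.region:
            kwargs["region_name"] = self.region
        return kwargs
```

With boto3 installed, `boto3.client(**target.boto3_client_kwargs()).head_bucket(Bucket=target.remote_bucket)` is one way to confirm that the keys can reach the remote bucket. AWS endpoints normally have no explicit port, so `is_swarm_style_endpoint` is meant only for Swarm targets.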

Azure Blob

Remote Container - Enter the Azure container name.

Remote Folder - An optional folder path within the remote container.

Authentication Method - Either Account and Key, or SAS URL.

The SAS URL must grant list permission in addition to the other relevant file permissions.
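A SAS URL lists its granted permissions in the `sp` query parameter, where `l` means list. The sketch below reads that parameter so a missing list permission can be caught before the job starts. It does not check the token's signature, expiry, or scope, and the helper names are hypothetical.

```python
from typing import Set
from urllib.parse import parse_qs, urlsplit


def sas_permissions(sas_url: str) -> Set[str]:
    """Permission letters from the `sp` query parameter of a SAS URL."""
    values = parse_qs(urlsplit(sas_url).query).get("sp", [])
    return set(values[0]) if values else set()


def sas_allows_list(sas_url: str) -> bool:
    """True if the SAS URL grants list ('l') permission."""
    return "l" in sas_permissions(sas_url)
```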

Click Begin Copy. This button is enabled once all required text fields are filled.

The copy operation generates support objects (manifest, dictionary object, log, and result). Each support object is named with the job name as a prefix, followed by a distinct suffix. The duration of the job depends on its size (the number of objects and the total number of bytes to transfer). To monitor the status of the job, download and open the latest copy of the status log.
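Support objects share the job name as a prefix, and reusing a job name overwrites the earlier manifest and log. Two small helpers address this. The timestamp naming scheme is a suggestion, not a product convention. The log suffix is not given in this section, so `suffix_marker` is a placeholder for the suffix you see in your bucket. Both function names are hypothetical.

```python
from datetime import datetime, timezone
from typing import Iterable, Optional, Tuple


def unique_job_name(base: str, now: Optional[datetime] = None) -> str:
    """Append a UTC timestamp so a rerun does not overwrite earlier support objects."""
    now = now or datetime.now(timezone.utc)
    return f"{base}-{now.strftime('%Y%m%dT%H%M%SZ')}"


def latest_support_object(listing: Iterable[Tuple[str, datetime]], job_name: str,
                          suffix_marker: str) -> Optional[str]:
    """Newest object whose name starts with job_name and contains suffix_marker after it.

    A job name that is a prefix of another (e.g. "nightly" and "nightly2")
    matches both jobs' objects, so keep job names distinct.
    """
    candidates = [
        (modified, name)
        for name, modified in listing
        if name.startswith(job_name) and suffix_marker in name[len(job_name):]
    ]
    if not candidates:
        return None
    # Tuples compare by last-modified time first, so max() picks the newest.
    return max(candidates)[1]
```

`listing` is any sequence of (name, last-modified) pairs, such as one produced by a bucket listing tool. All timestamps should be either timezone-aware or naive so they can be compared.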

...