version 4.0 | document revision 1

...

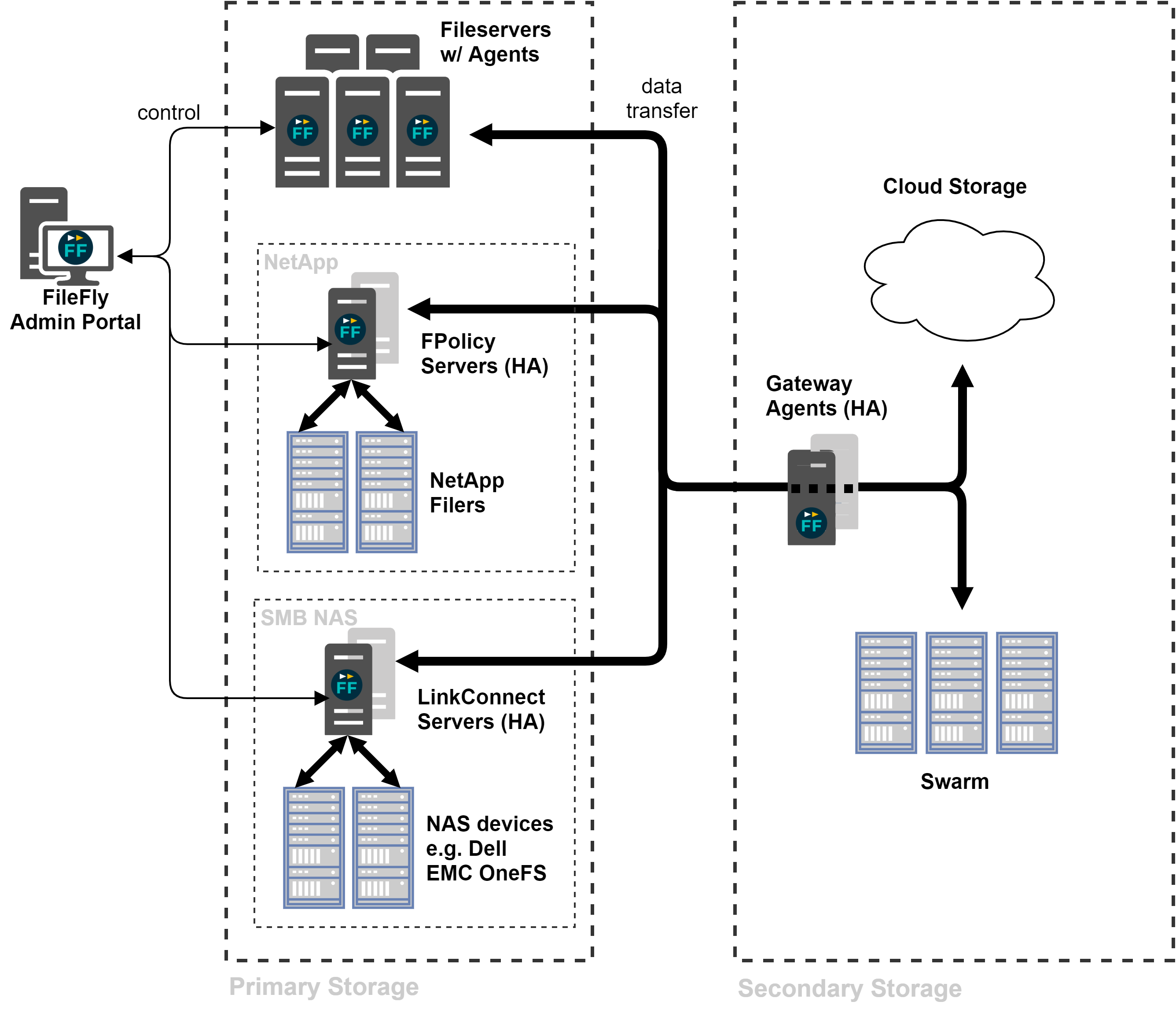

Figure 1.1 provides an overview of a FileFly deployment. All communication between FileFly components is secured with Transport Layer Security (TLS). The individual components are described below.

FileFly System Overview

...

DataCore FileFly Admin Portal is the web-based interface that provides central management of a FileFly deployment. It is installed as part of the FileFly Tools package.

When entering the FileFly Admin Portal, the 'Dashboard' will be displayed – we will come back to the dashboard in Section 1.5. For now, the remainder of this section will follow the Admin Portal's navigation menu.

...

The 'Servers' page displays the installed and activated agents across the FileFly deployment. Health information and statistics are provided for each server or cluster node. You will use this page when activating the other components in your system.

Click a Server's ellipsis control to:

view additional server information

configure storage plugins

add / retire / restart cluster nodes

upgrade a standalone server to high-availability

view detailed charts of recent activity

edit server-specific configuration (see Appendix E)

Sources describe volumes or folders to which Policies may be applied (e.g., locations on the network from which files may be Migrated).

A Source location is specified by a URI. Platform-specific information for all supported sources is detailed in Chapter 5. A filesystem browser is provided to assist in setting the URI location interactively.

...

Destinations are storage locations that Policies may write files to (e.g., locations on the network to which files are Migrated). Platform-specific information for all supported destinations is detailed in Chapter 5.

Optionally, a Destination may be configured to use Write Once Read Many (WORM) semantics for migration operations. No attempt will be made thereafter to update the resultant secondary storage objects. This option is useful when the underlying storage device has WORM-like behavior, but is exposed using a generic protocol.

...

Rules allow a specific subset of files within a Source or Sources to be selected for processing.

Rules can match a variety of metadata: filename / pathname, size, timestamps / age, file owner, and attribute flags. A rule matches if all of its specified criteria match the file's metadata. However, rules can be negated or compounded as necessary to perform more complex matches.

You will be able to simulate your Rules against your Sources during Policy creation.
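The matching semantics above can be modelled in a few lines. The following Python sketch is illustrative only: `FileMeta`, `Rule` and `rule_matches` are hypothetical names, not a FileFly API (Rules are configured in the Admin Portal), and only three of the available criteria are modelled. It assumes that a criterion left unset is simply ignored, so a Rule with no criteria matches every file.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional


@dataclass
class FileMeta:
    """Hypothetical subset of the metadata a Rule can inspect."""
    name: str
    size: int            # bytes
    modified: datetime   # last-modified timestamp
    owner: str


@dataclass
class Rule:
    """Hypothetical Rule: a criterion set to None is not specified."""
    min_size: Optional[int] = None
    min_age: Optional[timedelta] = None
    owner: Optional[str] = None
    negate: bool = False


def rule_matches(rule: Rule, f: FileMeta, now: datetime) -> bool:
    """Return True if every specified criterion matches, then apply negation."""
    checks = []
    if rule.min_size is not None:
        checks.append(f.size >= rule.min_size)
    if rule.min_age is not None:
        checks.append(now - f.modified >= rule.min_age)
    if rule.owner is not None:
        checks.append(f.owner == rule.owner)
    matched = all(checks)  # all() of an empty list is True: no criteria matches all
    return not matched if rule.negate else matched
```

For example, a 5MB file last modified a year ago matches `Rule(min_size=1_000_000, min_age=timedelta(days=180))`, and the same Rule with `negate=True` excludes it.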

Some criteria are specified as comma-separated lists of patterns:

wildcard patterns, e.g. *.doc (see A.1)

regular expressions, e.g. /2004-06-[0-9][0-9]\.log/ (see A.2)

Note that:

files match if any one of the patterns in the list matches

whitespace before and after each pattern is ignored

patterns are case-insensitive

filename patterns starting with '/' match the path from the point specified by the Source URI

filename patterns NOT starting with '/' match files in any subtree

literal commas within a pattern must be escaped with a backslash
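The list-handling notes above can be illustrated with a short Python sketch. It is an approximation under stated assumptions, not FileFly's matcher: it handles wildcard patterns only (regular expressions from A.2 are not modelled), it relies on Python's `fnmatch`, in which `*` also matches `/` (the exact wildcard syntax in A.1 may differ), and paths are given relative to the Source URI with `/` separators. `split_patterns` and `matches_any` are hypothetical helper names.

```python
import fnmatch


def split_patterns(spec: str) -> list:
    """Split a comma-separated pattern list; a backslash-escaped comma is literal."""
    patterns, current, i = [], [], 0
    while i < len(spec):
        if spec[i] == "\\" and i + 1 < len(spec) and spec[i + 1] == ",":
            current.append(",")  # escaped comma becomes part of the pattern
            i += 2
            continue
        if spec[i] == ",":
            patterns.append("".join(current).strip())  # surrounding whitespace ignored
            current = []
        else:
            current.append(spec[i])
        i += 1
    patterns.append("".join(current).strip())
    return [p for p in patterns if p]


def matches_any(spec: str, rel_path: str) -> bool:
    """True if any wildcard pattern in `spec` matches `rel_path` (case-insensitive).

    `rel_path` is relative to the Source URI, e.g. "projects/q1/plan.doc".
    """
    path = rel_path.strip("/").lower()
    parts = path.split("/")
    for pattern in split_patterns(spec):
        pat = pattern.lower()
        if pat.startswith("/"):
            # Anchored: match from the point specified by the Source URI.
            if fnmatch.fnmatchcase(path, pat[1:]):
                return True
        else:
            # Unanchored: match the trailing path components in any subtree.
            for i in range(len(parts)):
                if fnmatch.fnmatchcase("/".join(parts[i:]), pat):
                    return True
    return False
```

With this model, `/archive/*.log` matches `archive/x.log` but not `old/archive/x.log`, while the unanchored `archive/*.log` matches both.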

A Policy specifies an operation to perform on a set of files. Depending on the type of operation, a Policy will specify Source(s) and/or Destination(s), and possibly Rules to limit the Policy to a subset of files.

Each operation has different parameters; refer to Chapter 4 for a full reference.

A Task schedules one or more Policies for execution. Tasks can be scheduled to run at specific times, or can be run on-demand via the Quick Run control on the 'Dashboard'.

While a Task is running, its status is displayed in the 'Running Tasks' panel of the 'Dashboard'. When Tasks finish they are moved to the 'Recent Tasks' panel.

Operation statistics are updated in real time as the task runs. Operations will automatically be executed in parallel; see Appendix E for more details.

If multiple Tasks are scheduled to start simultaneously, Policies on each Source are grouped such that only a single traversal of each file system is required.
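Conceptually, this grouping resembles building one work list per Source before any traversal starts. In the Python sketch below, the task and policy layout and the function name `plan_traversals` are hypothetical; it only shows the idea that every Policy targeting a Source can be evaluated during a single walk of that Source, not how FileFly schedules work internally.

```python
from collections import defaultdict


def plan_traversals(tasks: dict) -> dict:
    """Group the Policies of simultaneously starting Tasks by Source.

    `tasks` maps a task name to a list of (policy_name, [source, ...]) tuples.
    Returns {source: [policy_name, ...]}, so each Source is walked once and
    every Policy that targets it is applied during that single walk.
    """
    by_source = defaultdict(list)
    for policies in tasks.values():
        for policy_name, sources in policies:
            for source in sources:
                if policy_name not in by_source[source]:
                    by_source[source].append(policy_name)
    return dict(by_source)
```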

...

When a Task finishes running, regardless of whether it succeeds or fails, a completion notification email may be sent as a convenience to the administrator. This notification email contains summary information similar to that available in the 'Recent Tasks' panel on the 'Dashboard'.

To use this feature, either:

check the 'Notify completion' option when configuring the Task, or

click the notify icon on a running task on the 'Dashboard'

Reports – generated by Gather Statistics Policies – contain charts detailing:

a 30-day review of access and change activity

a long-term trend chart to assist with planning migration strategy

a breakdown of the most common file types

optionally, a breakdown of file ownership

The 'Recovery' page provides access to multiple versions of the recovery files produced by each Create Recovery File From Source/Destination Policy. Retention options may be adjusted in 'Settings'.

Refer to Chapter 6 for more information on performing recovery operations.

...

The FileFly Admin Portal 'Settings' page allows configuration of a wide range of global settings including:

email notification

configuration backup (see Section 3.3)

work hours

Admin Portal logging

user interface language selection

It is also possible to suspend the scheduler to prevent scheduled Tasks from starting while maintenance procedures are being performed.

Server-specific settings and plugin configuration are available on the 'Servers' page.

...

The 'Dashboard' provides a concise view of the FileFly system status, current activity and recent task history. It may also be used to run Tasks on-demand via the Quick Run control.

The 'Notices' panel, displayed on the expandable graph bar, summarizes system issues that need to be addressed by the administrator. For instance, this panel will guide you through initial setup tasks such as license installation.

The circular 'Servers' display shows high-level health information for the servers / clusters in the FileFly deployment.

...

The 'Processed' line chart graphs both the rate of operations successfully performed and data processed over time. Data transfer and bytes Quick-Remigrated (i.e. without any transfer required) are shown separately.

The 'Operations' breakdown chart shows successful activity by operation type across the whole system over time. Additionally, per-server operations charts are available via the 'Servers' page – see Section 1.4.1.

The 'Operations' radar chart shows a visual representation of the relative operation profile across your deployment. Two figures are drawn, one for each of the two preceding 7-day periods. This allows behavioral change from week to week to be seen at a glance.

...

Refer to these instructions during initial deployment and when adding new components. For upgrade instructions, please refer to Section 3.7 instead.

For further information about each supported storage platform, refer to Chapter 5.

...

A dedicated server with a supported operating system:

Windows Server 2019

Windows Server 2016

Windows Server 2012 R2

Windows Server 2012

Minimum 4GB RAM

Minimum 2GB disk space for log files

Active clock synchronization (e.g. via NTP)

Run DataCore FileFly Tools.exe

Follow the instructions on screen

After completing the installation process, FileFly Tools must be configured via the Admin Portal web interface. The FileFly Admin Portal will be opened automatically and can be found later via the Start Menu.

The web interface will lead you through the process of initial configuration: refer to the 'Notices' panel on the 'Dashboard' to ensure that all steps are completed.

...

At least two FileFly Gateways are required for High-Availability.

Add each FileFly Gateway server to DNS

Create an FQDN that resolves to all of the IP addresses

Use this FQDN when activating the HA Servers

Use this FQDN (or a CNAME alias to it) in FileFly Destination URIs

Example:

gw-1.example.com → 192.168.0.1

gw-2.example.com → 192.168.0.2

gw.example.com → 192.168.0.1, 192.168.0.2

Note: The servers that form the High-Availability Gateway cluster must NOT be members of a Windows failover cluster.
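Before activating the HA Servers, it can be worth confirming that the shared FQDN really resolves to every Gateway address. This Python sketch uses the standard `socket.getaddrinfo` call; the helper names are hypothetical, only IPv4 (A) records are considered, and a successful lookup says nothing about whether the Gateways themselves are healthy. The resolver is a parameter so the check can be exercised without live DNS.

```python
import socket


def resolved_ips(fqdn: str, resolver=socket.getaddrinfo) -> set:
    """Return the set of IPv4 addresses that `fqdn` resolves to."""
    infos = resolver(fqdn, None, socket.AF_INET, socket.SOCK_STREAM)
    return {info[4][0] for info in infos}  # sockaddr is (address, port)


def check_gateway_alias(fqdn: str, expected_ips, resolver=socket.getaddrinfo) -> set:
    """Return the expected Gateway IPs that the alias does NOT resolve to."""
    return set(expected_ips) - resolved_ips(fqdn, resolver)
```

For the example above, `check_gateway_alias("gw.example.com", ["192.168.0.1", "192.168.0.2"])` should return an empty set when both addresses are published.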

...

Supported Windows Server operating system:

Windows Server 2019

Windows Server 2016

Windows Server 2012 R2

Windows Server 2012

Minimum 4GB RAM

Minimum 2GB disk space for log files

Active clock synchronization (e.g. via NTP)

Note: When installed in the Gateway role, a dedicated server is required, unless it is to be co-located on the FileFly Tools server. When co-locating, create separate DNS aliases to refer to the Gateway and the FileFly Admin Portal web interface.

Run DataCore FileFly Agent.exe

Follow the instructions to activate the agent via FileFly Admin Portal

...

A dedicated server with a supported operating system:

Windows Server 2019

Windows Server 2016

Windows Server 2012 R2

Windows Server 2012

Minimum 4GB RAM

Minimum 2GB disk space for log files

Active clock synchronization (e.g. via NTP)

Installation of the FileFly FPolicy Server software requires careful preparation of the NetApp Filer and the FileFly FPolicy Server machines. Instructions are provided in Section 5.3.

...

A dedicated server with a supported operating system:

Windows Server 2019

Windows Server 2016

Minimum 2GB disk space for log files (on the system volume)

Minimum 1TB disk space for LinkConnect Cache (as a single NTFS volume)

RAM: 8GB base, plus:

4GB per TB of LinkConnect Cache

0.5GB per billion link-migrated files

Active clock synchronization (e.g. via NTP)
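The RAM figure can be worked out directly from the sizing rule above. A minimal Python sketch, assuming "billion" means 10^9 files and treating the 1TB cache minimum as a hard lower bound (`linkconnect_ram_gb` is a hypothetical helper name):

```python
def linkconnect_ram_gb(cache_tb: float, link_migrated_files: int) -> float:
    """Minimum RAM in GB: 8GB base + 4GB per TB of cache + 0.5GB per billion files."""
    if cache_tb < 1:
        raise ValueError("LinkConnect Cache must be at least 1TB")
    base_gb = 8.0
    cache_gb = 4.0 * cache_tb
    links_gb = 0.5 * (link_migrated_files / 1_000_000_000)  # assumes billion = 10**9
    return base_gb + cache_gb + links_gb


print(linkconnect_ram_gb(2, 3_000_000_000))  # 17.5
```

For example, a 2TB cache serving 3 billion link-migrated files needs at least 8 + 2 × 4 + 3 × 0.5 = 17.5GB of RAM.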

Installation of the FileFly LinkConnect Server software requires careful configuration of both the NAS / file server and the FileFly LinkConnect Server machines. Instructions are provided in Section 5.4 for OneFS and Section 5.2 for Windows file servers. Other devices are not supported.

...

Once one or more LinkConnect Servers have been deployed, every Windows client that needs to access link-migrated files requires the LinkConnect Client Driver, installed as follows:

Ensure the client machine is joined to the Active Directory domain

Run DataCore FileFly LinkConnect Client Driver.exe

Follow the prompts

Alternatively, to ease deployment, the installer may be run in silent mode by specifying /S on the command line. Note that when the driver is upgraded silently, the updated driver will not be loaded until the next reboot.

Important: Client Driver versions newer than the installed FileFly LinkConnect Server version should not be deployed.
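If driver deployment is scripted (for example alongside the silent /S install), a simple guard can enforce the rule above. This Python sketch assumes both versions are plain dotted numeric strings such as `4.0.1`; how the versions are obtained is outside its scope, and the function names are hypothetical.

```python
def parse_version(text: str) -> tuple:
    """Turn a dotted numeric version such as '4.0.1' into a tuple of ints."""
    return tuple(int(part) for part in text.strip().split("."))


def client_driver_allowed(driver_version: str, server_version: str) -> bool:
    """A Client Driver must not be newer than the installed LinkConnect Server."""
    d, s = parse_version(driver_version), parse_version(server_version)
    width = max(len(d), len(s))
    d += (0,) * (width - len(d))  # pad so that '4.0' compares equal to '4.0.0'
    s += (0,) * (width - len(s))
    return d <= s
```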

...

Once the software has been installed, the first step in any new FileFly deployment is to analyze the characteristics of the primary storage volumes. The following steps describe how to generate file statistics reports for each volume.

In the FileFly Admin Portal web interface:

Create Sources for each volume to analyze

Create a 'Gather Statistics' Policy and select all defined Sources

Create a Task for the 'Gather Statistics' Policy

For now, disable the schedule

On the 'Dashboard', click the quick run icon

Run the Task

When the Task has finished, view the report(s) on the 'Reports' page

Using the information from the reports, create a rule to select files for migration. A typical rule might limit migrations to files modified more than six months ago. The reports' long-term trend charts will indicate the amount of data that will be migrated by a 'modified more than n months ago' rule – adjust the age cutoff as necessary to suit your filesystems.

To avoid unnecessary migration of active files, be conservative with your first Migration Rule – it can be updated to migrate more recently modified files on subsequent runs.
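To sanity-check a candidate cutoff on a small test folder, the following Python sketch totals the size of files last modified more than n months ago. It is a rough stand-in for the report's long-term trend chart, not a replacement for a Gather Statistics Policy: it treats a month as 30 days, counts regular files only, and, because stubs retain their original logical size, it would also count files that are already migrated. `bytes_older_than` is a hypothetical helper.

```python
import os
import stat
import time
from typing import Optional

SECONDS_PER_MONTH = 30 * 24 * 60 * 60  # approximation: one "month" = 30 days


def bytes_older_than(root: str, months: int, now: Optional[float] = None) -> int:
    """Total size of regular files under `root` last modified more than `months` ago."""
    now = time.time() if now is None else now
    cutoff = now - months * SECONDS_PER_MONTH
    total = 0
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            st = os.lstat(os.path.join(dirpath, name))  # metadata only; file is not opened
            if stat.S_ISREG(st.st_mode) and st.st_mtime < cutoff:
                total += st.st_size
    return total
```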

Once the Rule has been created:

Create a Destination to store your migrated data

see Chapter 5 for platform-specific instructions

Create a Migration Policy and add the Source(s), Rule and Destination

Use the 'Simulate rule matching…' button to explore the effect of your rule

Create a Task for the new Policy

Run the task

When the task has completed, check the corresponding 'Recent Tasks' entries on the 'Dashboard'. Click on the log icon to review any errors in detail.

Migration is typically performed periodically: configure a schedule on the Migration Task.

Chapter 4 describes all FileFly Policy Operations in detail and will help you to get the most out of FileFly.

The remainder of this chapter gives guidance on using FileFly in a production environment.

...

This section describes how to back up DataCore FileFly configuration (for primary and secondary storage backup considerations, see Section 3.4).

...

Configuration backup can be scheduled on the Admin Portal's 'Settings' page. A default schedule is created at installation time to back up configuration once a week.

Configuration backup files include:

Policy configuration

Server registrations

Per-Server settings, including plugin configuration, keys etc.

Note: FileFly FPolicy Server configuration is not included – see Section 3.3.2.1

Recovery files

Settings from the Admin Portal 'Settings' page

Settings specified when FileFly Tools was installed

It is strongly recommended that these backup files are retrieved and stored securely as part of your overall backup plan. These backup files can be found at:

C:\Program Files\DataCore FileFly\data\AdminPortal\configBackups

Additionally, log files may be backed up from:

C:\Program Files\DataCore FileFly\logs\AdminPortal\

C:\Program Files\DataCore FileFly\logs\DrTool\
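Retrieving the backups can be automated. This Python sketch copies the newest backup from the default directory listed above to another location; it assumes the backups are `.zip` files (as the restore procedure suggests) and that "newest" means most recently modified. The helper names and the destination are hypothetical; point the destination at storage covered by your overall backup plan.

```python
import shutil
from pathlib import Path
from typing import Optional

# Default location given above; adjust if FileFly Tools was installed elsewhere.
BACKUP_DIR = Path(r"C:\Program Files\DataCore FileFly\data\AdminPortal\configBackups")


def latest_backup(directory: Path = BACKUP_DIR) -> Optional[Path]:
    """Return the most recently modified .zip backup in `directory`, or None."""
    zips = [p for p in directory.glob("*.zip") if p.is_file()]
    return max(zips, key=lambda p: p.stat().st_mtime, default=None)


def copy_latest_backup(destination: Path, directory: Path = BACKUP_DIR) -> Optional[Path]:
    """Copy the newest backup into `destination`; return the copy's path, or None."""
    newest = latest_backup(directory)
    if newest is None:
        return None
    destination.mkdir(parents=True, exist_ok=True)
    return Path(shutil.copy2(newest, destination / newest.name))  # keeps timestamps
```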

Ensure that the server to be restored to has the same FQDN as the original server

If present, uninstall DataCore FileFly Tools

Run the installer: DataCore FileFly Tools.exe

use the same version that was used to generate the backup file

On the 'Installation Type' page, select 'Restore from Backup'

Choose the backup zip file and follow the instructions

Optionally, log files may be restored from server backups to:

C:\Program Files\DataCore FileFly\logs\AdminPortal\

C:\Program Files\DataCore FileFly\logs\DrTool\

...

On each replacement server:

Reinstall with the same version of the installer

Stop the 'DataCore FileFly Agent' service

Restore the contents of the following directories from backup:

C:\Program Files\DataCore FileFly\data\FileFly Agent\

C:\Program Files\DataCore FileFly\logs\FileFly Agent\

Restart the 'DataCore FileFly Agent' service

...

Ensure that the restoration of stubs is included as part of your backup & restore test regimen.

When using Scrub policies, ensure the Scrub grace period is sufficient to cover the time from when a backup is taken to when the restore and Post-Restore Revalidate steps are completed (see below).

It is strongly recommended to set the global minimum grace period accordingly to guard against the accidental creation of scrub policies with insufficient grace. This setting may be configured on the FileFly Admin Portal 'Settings' page.

Important: It will NOT be possible to safely restore stubs or MigLinks from a backup set taken more than one grace period ago.
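The timing constraint above reduces to simple date arithmetic. A Python sketch, assuming the grace period is the value configured in 'Settings' and treating the boundary conservatively (exactly one grace period is not considered safe); the function names are hypothetical:

```python
from datetime import datetime, timedelta


def restore_is_safe(backup_taken: datetime, revalidate_finished: datetime,
                    grace_period: timedelta) -> bool:
    """True if restore and Post-Restore Revalidate finish within one grace period
    of the time the backup was taken (the exact boundary is treated as unsafe)."""
    return revalidate_finished - backup_taken < grace_period


def oldest_usable_backup(now: datetime, expected_restore_time: timedelta,
                         grace_period: timedelta) -> datetime:
    """A backup must be taken strictly after this instant to be restored safely."""
    return now + expected_restore_time - grace_period
```

For example, with a 30-day grace period and a restore expected to take one day, a restore started on 31 January can only safely use a backup taken after 2 January.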

...

Suspend the scheduler in FileFly Admin Portal

Restore the primary volume

Run a 'Post-Restore Revalidate' policy against the primary volume

To ensure all stubs are revalidated, run this policy against the entire primary volume, NOT simply against the migration source

This policy is not required when only WORM destinations are in use

Restart the scheduler in FileFly Admin Portal

If restoring the primary volume to a different server (a server with a different FQDN), the following preparatory steps will also be required:

On the 'Servers' page, retire the old server (unless still in use for other volumes)

Install FileFly Agent on the new server

Update Sources as required to refer to the FQDN of the new server

Perform the restore process as above

...

Most enterprise Windows backup software will respect FileFly stubs and will back them up correctly without causing any unwanted demigrations. For some backup software, it may be necessary to refer to the software documentation for options regarding Offline files.

When testing backup software configuration, test that backup of stubs does not cause unwanted demigration.

Additional backup testing may be required if Stub Deletion Monitoring is in use. Please refer to Appendix E for more details.

Please consult Section 5.3.5 for information regarding snapshot restore on NetApp Filers.

...

Check that your FileFly configuration is adequately backed up – see Section 3.3

Review the storage backup and restore procedures described in Section 3.4

Check that backup software can back up stubs without triggering demigration

Check that backup software restores stubs and that they can be demigrated

Schedule regular 'Create Recovery File From Source' Policies on your migration sources – see Section 4.10

Generally, antivirus software will not cause demigrations during normal file access. However, some antivirus software will demigrate files when performing scheduled file system scans.

Prior to production deployment, always check that installed antivirus software does not cause unwanted demigrations. Some software must be configured to skip offline files in order to avoid these inappropriate demigrations. Consult the antivirus software documentation for further details.

If the antivirus software does not provide an option to skip offline files during a scan, DataCore FileFly Agent may be configured to deny demigration rights to the antivirus software. Refer to Appendix E for more information.

It may be necessary for some antivirus products to exempt the DataCore FileFly Agent process from real-time protection (scan-on-access). If the exclusion configuration requires the path of the executable to be specified, be sure to update the exclusion whenever FileFly is upgraded (since the path will change on upgrade).

...

Check for other applications that open all the files on the whole volume. Audit scheduled processes on file servers – if such processes cause unwanted demigration, it may be possible to block them (see Appendix E).

To facilitate proactive monitoring, it is recommended to:

Configure email notifications to monitor system health and Task activity

Enable syslog – see Appendix E

For further information on platform-specific interoperability considerations, please refer to the appropriate sections of Chapter 5.

Periodically re-assess file distribution and access behavior:

Run 'Gather Statistics' Policies

Examine reports

Examine Server statistics – see Section 1.4.1

For more detail, examine demigrations in the file server agent.log files

Consider:

Are there unexpected peaks in demigration activity?

Are there any file types that should not be migrated?

Should different rules be applied to different file types?

Is the Migration Policy migrating data that is regularly accessed?

Are the Rules aggressive enough or too aggressive?

What is the data growth rate on primary and secondary storage?

Are there subtrees on the source file system that should be addressed by separate policies or excluded from the source entirely?

When a FileFly deployment is upgraded from a previous version, FileFly Tools must always be upgraded first, followed by all Server components.

Run:

DataCore FileFly Tools.exe

...

Run DataCore FileFly Agent.exe and follow the instructions

Resolve any warnings displayed on the 'Dashboard'

Run DataCore FileFly NetApp FPolicy Server.exe and follow the instructions

Run DataCore FileFly NetApp Cluster-mode Config.exe and follow the instructions

Resolve any warnings displayed on the 'Dashboard'

Run DataCore FileFly LinkConnect Server.exe and follow the instructions

Resolve any warnings displayed on the 'Dashboard'

...

Requires: Source(s), Rule(s), Destination

Included in Community Edition: yes

Migrate file data from selected Source(s) to a Destination. Stub files remain at the Source location as placeholders until files are demigrated. File content will be transparently demigrated (returned to primary storage) when accessed by a user or application. Stub files retain the original logical size and file metadata. Files containing no data will not be migrated.

Each Migrate operation will be logged as a Migrate, Remigrate, or Quick-Remigrate.

A Remigrate is the same as a Migrate except it explicitly recognizes that a previous version of the file had been migrated in the past and that stored data pertaining to that previous version is no longer required and so is eligible for removal via a Scrub policy.

A Quick-Remigrate occurs when a file has been demigrated and NOT modified. In this case it is not necessary to retransfer the data to secondary storage so the operation can be performed very quickly. Quick-remigration does not change the secondary storage location of the migrated data.

Optionally, quick-remigration of files demigrated within a specified number of days may be prevented. This option can be used to avoid quick-remigrations occurring in an overly aggressive fashion.
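The distinction between Migrate, Remigrate and Quick-Remigrate can be summarized as a decision function. The Python sketch below is a conceptual model of the behavior described above, not FileFly's implementation: `FileState` and `classify` are hypothetical, "no data" is simplified to a size of zero, and it assumes that existing stubs, and files blocked by the "recently demigrated" option, are simply left alone.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional


@dataclass
class FileState:
    """Hypothetical view of one file as a Migrate policy might see it."""
    size: int
    previously_migrated: bool        # earlier migration left data on secondary storage
    is_stub: bool                    # currently migrated (not demigrated)
    modified_since_demigrate: bool
    demigrated_at: Optional[datetime] = None


def classify(f: FileState, now: datetime,
             min_days_since_demigrate: Optional[int] = None) -> str:
    if f.size == 0 or f.is_stub:
        return "skip"  # no data to migrate, or already a stub
    if not f.previously_migrated:
        return "migrate"
    if f.modified_since_demigrate:
        return "remigrate"  # previous version's stored data becomes eligible for Scrub
    if (min_days_since_demigrate is not None and f.demigrated_at is not None
            and now - f.demigrated_at < timedelta(days=min_days_since_demigrate)):
        return "skip"  # quick-remigration prevented for recently demigrated files
    return "quick-remigrate"  # unmodified: no data transfer required
```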

Additionally, this policy may be configured to pause during the globally configured work hours.

Note: For Sources using a FileFly LinkConnect Server, such as Dell EMC OneFS shares, see Section 4.9 instead.

...

Requires: Source(s)

Included in Community Edition: yes

Scan all stubs present on a given Source, revalidating the relationship between the stubs and the corresponding files on secondary storage. This operation is required following a restore from backup and should be performed on the root of the restored source volume.

If only Write Once Read Many (WORM) destinations are in use, this policy is not required.

Important: This revalidation operation MUST be integrated into backup/restore procedures; see Section 3.4.1.

...

Requires: Source(s), Rule(s)

Included in Community Edition: yes

Demigrates files with advanced options:

Disconnect files from destination – remove destination information from demigrated files (both files demigrated by this policy and files that have already been demigrated); it will no longer be possible to quick-remigrate these files

A Destination Filter may optionally be specified in order to demigrate/disconnect only files that were migrated to a particular destination

Prior to running an Advanced Demigrate policy, be sure that there is sufficient primary storage available to accommodate the demigrated data.

...

Requires: Source(s), Rule(s), Destination

Included in Community Edition: yes

Premigrate file data from selected Source(s) to a Destination in preparation for migration. Files on primary storage will not be converted to stubs until a Migrate or Quick-Remigrate Policy is run. Files containing no data will not be premigrated.

This can assist with:

a requirement to delay the stubbing process until secondary storage backup or replication has occurred

reduction of excessive demigrations while still allowing an aggressive Migration Policy.

Premigration is, as the name suggests, intended to be followed by full migration/quick-remigration. If this is not done, a large number of files in the premigrated state may slow down further premigration policies, as the same files are rechecked each time.

By default, files already premigrated to another destination will be skipped when encountered during a premigrate policy.

This policy may also be configured to pause during the globally configured work hours.

Note: Most deployments will not use this operation, but will use a combination of Migrate and Quick-Remigrate instead.

...

Requires: Source(s), Rule(s), Destination

Included in Community Edition: yes

For platforms that do not support standard stub-based migration, Link-Migrate file data from selected Source(s) to a Destination.

Files at the source location will be replaced with FileFly-encoded links (MigLinks) which allow client applications to transparently read data without returning files to primary storage. If an application attempts to modify a link, the file will be automatically returned to primary storage and then modified in-place. Files containing no data will be skipped by this policy.

MigLinks present the original logical size and file metadata.

Since MigLinks remain links when read by client applications, there is no analogue of quick-remigration for link-migrate.

This policy may be configured to pause during the globally configured work hours.

Note: To perform link-migration to Swarm targets, the destination should use the s3generic scheme; see Section 5.8.

...

Requires: Destination

Included in Community Edition: no

Generate a disaster recovery file for DataCore FileFly DrTool by reading the index and analyzing files at the selected Destination without reference to the associated primary storage files.

Note: Recovery files from Destination may not account for renames.

Important: It is strongly recommended to use 'Create Recovery File From Source' in preference where possible.

...

Requires: Source(s), Rule(s)

Included in Community Edition: yes

Erases cached data associated with files by the Partial Demigrate feature (NetApp Sources only).

Important: The Erase Cached Data operation is not enabled by default. It must be enabled in 'Settings' → 'Additional Options'.

...

Windows NTFS volumes may be used as migration sources. On Windows Server 2016 and above, ReFS volumes are also supported as migration sources.

Windows stub files can be identified by the 'O' (Offline) attribute in Explorer. Depending on the version of Windows, files with this flag may be displayed with an overlay icon.

Note: If it is not possible to install the DataCore FileFly Agent directly on the file server, see Section 5.2 for an alternative solution using Link-Migration.

...

A license that includes an appropriate entitlement for Windows

When creating a production deployment plan, please refer to Section 3.5.

...

See Section 2.2.2, Installing FileFly Agent for Windows.

This section describes Windows-specific considerations only and should be read in conjunction with Section 3.5.

FileFly supports Microsoft Storage Replica.

If Storage Replica is configured for asynchronous replication, a disaster failover effectively reverts the volume to a previous point in time. As such, this kind of failover is directly equivalent to a volume restore operation (albeit to a very recent state).

As with any restore, a Post-Restore Revalidate Policy (see Section 4.5) should be run across the restored volume within the scrub grace period window. This will ensure correct operation of any future scrub policies by accounting for discrepancies between the demigration state of the files on the (failed) replication source volume and the replication destination volume.

Important: integrate this process into your recovery procedures prior to production deployment of asynchronous storage replication.

...

DFSR is supported for:

Windows Server 2019

Windows Server 2016

Windows Server 2012 R2

FileFly Agents must be installed (selecting the migration role during installation) on EACH member server of a DFS Replication Group prior to running migration tasks on any of the group's Replication Folders.

If adding a new member server to an existing Replication Group where FileFly is already in use, FileFly Agent must be installed on the new server first.

When running policies on a Replicated Folder, sources should be defined such that each policy acts upon only one replica. DFSR will replicate the changes to the other members as usual.

Read-only (one-way) replicated folders are NOT supported. However, read-only SMB shares can be used to prevent users from writing to a particular replica as an alternative.

Due to the way DFSR is implemented, care should be taken to avoid writing to stub files that are being concurrently accessed from another replica.

In the rare event that DFSR-replicated data is restored to a member from backup, ensure that DFSR services on all members are running and that replication is fully up-to-date (check for the DFSR 'finished initial replication' Windows Event Log message), then run a Post-Restore Revalidate Policy using the same source used for migration.

...

Retiring a replica effectively creates two independent copies of each stub, without updating secondary storage. To avoid any potential loss of data:

Delete the contents of the retired replica (preferably by formatting the disk, or at least disable Stub Deletion Monitoring during the deletion)

Run a Post-Restore Revalidate Policy on the remaining copy of the data

If it is strictly necessary to keep both, now independent, copies of the data and stubs, then run a Post-Restore Revalidate Policy on both copies separately (not concurrently).

...

The most common use of Robocopy with FileFly stubs is to preseed or stage initial synchronization. When performing such a preseeding operation:

for new Replicated Folders, ensure that the 'Primary member' is set to be the original server, not the preseeded copy

both servers must have FileFly Agent installed before preseeding

add a "Process Exclusion" to Windows Defender for robocopy.exe (allow a while for the setting to take effect)

on the source server, preseed by running robocopy with the /b flag (to copy stubs as-is to the new server)

once preseeding is complete and replication is fully up-to-date (check for the DFSR 'finished initial replication' Windows Event Log message), it is recommended to run a Post-Restore Revalidate Policy on the original FileFly Source

Note: If the process above is aborted, be sure to delete all preseeded files and stubs (preferably by formatting the disk, or at least disable Stub Deletion Monitoring during the deletion) and then run a Post-Restore Revalidate Policy on the original FileFly Source.

...

Robocopy will, by default, demigrate stubs as they are copied. This is the same behavior as Explorer copy-paste, xcopy, etc.

Robocopy with the /b flag (backup mode – must be performed as an administrator) will copy stubs as-is.

Robocopy /b is not recommended. If stubs are copied in this fashion, the following must be considered:

for a copy from one server to another, both servers must have DataCore FileFly Agent installed

this operation is essentially a backup and restore in one step, and thus inappropriately duplicates stubs which are intended to be unique

after the duplication, one copy of the stubs should be deleted immediately

run a Post-Restore Revalidate policy on the remaining copy

this process will render the corresponding secondary storage files non-scrubbable, even after they are demigrated

to prevent Windows Defender triggering demigrations when the stubs are accessed in this fashion:

always run the robocopy from the source end (the file server with the stubs)

add a "Process Exclusion" to Windows Defender for robocopy.exe (allow some time for the setting to take effect)

...

Windows Shadow Copy – also known as Volume Shadow Copy Service (VSS) – allows previous versions of files to be restored, e.g. from Windows Explorer. This mechanism cannot be used to restore a stub; restore stubs from backup instead – see 3.4.

...

On Windows, the FileFly Agent can monitor stub deletions to identify secondary storage files that are no longer referenced in order to maximize the usefulness of Scrub Policies. This feature extends not only to stubs that are directly deleted by the user, but also to other cases of stub file destruction such as overwriting a stub or renaming a different file over the top of a stub.

Stub Deletion Monitoring is disabled by default. To enable it, please refer to E.

...

This section details the configuration of a DataCore FileFly LinkConnect Server to enable Link-Migration of files from Windows Server SMB shares. This option should be used when it is not possible to install DataCore FileFly Agent directly on the Windows file server in question. For other cases – where FileFly Agent can be installed on the server – please refer to 5.1.

Refer to 4.2 and 4.9 for details of the Migrate and Link-Migrate operations respectively.

Link-Migration works by pairing a Windows SMB share with a corresponding LinkConnect Cache Share. Typically a top-level share on each Windows file server volume is mapped to a unique share (or subdivision) on a FileFly LinkConnect Server. Multiple file server shares may use Cache Shares / subdivisions on the same FileFly LinkConnect Server if desired.

Once this configuration is completed, Link-Migrate policies convert files on the source Windows Server SMB share to links pointing to the destination files via the LinkConnect Cache Share, according to configured rules.

Link-Migrated files can be identified by the 'O' (Offline) attribute in Explorer. Depending on the version of Windows, files with this flag may be displayed with an overlay icon.

...

An NTFS Cache Volume of at least 1TB – see 2.2.4

A FileFly license that includes an entitlement for FileFly LinkConnect Server.

A supported secondary storage destination (excluding scsp and scspdirect)

When creating a production deployment plan, please refer to 3.5.

Windows Server 2016 or higher

The server must NOT have the Active Directory Domain Services role

Windows clients require a supported 64-bit Windows operating system:

Windows 10

Windows Server 2019

Windows Server 2016

Windows Server 2012 R2

In order to access link-migrated files, the LinkConnect Client Driver must be installed on each client machine – see 2.3.

...

Similarly to the Stub Deletion Monitoring feature provided by DataCore FileFly Agents on Windows, Link Deletion Monitoring (LDM) identifies secondary storage files that are no longer referenced in order to facilitate recovery of storage space by Scrub Policies. This feature extends not only to MigLinks that are demigrated or directly deleted by the user, but also to other cases such as overwriting a MigLink or renaming a different file over the top of a MigLink.

Unlike SDM, LDM requires a number of maintenance scans to determine that a given secondary storage file is no longer referenced. It should be noted that interrupting the maintenance process (e.g. by restarting the caretaker node or transitioning the caretaker role) will delay the detection of unreferenced secondary storage. For optimal and timely storage space recovery, ensure that LinkConnect Servers can run uninterrupted for extended periods.

Warning: in order to avoid LDM incorrectly identifying files as deleted – leading to unwanted data loss during Scrub – it is critical to ensure that users cannot move/rename MigLinks out of the scanned portion of the directory tree within the filesystem. This can be achieved by always creating the share used for your 'miglinkSource' at the root of the filesystem. An additional share may be created solely for this purpose.

To utilize LDM, it must first be enabled on a per-share basis.

...

On the file server:

Add the LinkConnect User to the local Administrators group

Add 'Full Control' permissions for this user to each share

be sure to configure the share permissions, not the folder permissions

On each FileFly LinkConnect Server machine:

Add the user created above to the local 'Administrators' group

Assign the 'Log on as a service' privilege to this user

Run the DataCore FileFly LinkConnect Server.exe

Follow the prompts to complete the installation

Follow the instructions to activate the installation

the Servers page will report that the server is unconfigured

On your cache volume (e.g. X:), navigate to X:\1bf8ce99-8c8a-4092-9c98-2b9c850c57a1\shares.

To create each Cache Share:

Create a new folder with the desired share name

Right click → Properties → Sharing → Advanced Sharing…

Tick 'Share this folder'

Share name must match the folder name exactly (including case)

Permissions:

Everyone: Allow 'Read' only

No other permissions

On the Admin Portal 'Servers' page, edit the configuration of the FileFly LinkConnect Server. In the 'Manual Overrides' panel, add the following options:

linkconnect.config.linkConnectAlias=ALIAS_FQDN

where ALIAS_FQDN is either the FQDN of the FileFly LinkConnect Server (standalone mode), or of the DFSN domain (standalone or high-availability).

For each share mapping, add:

linkconnect.config.MAPPING_NUMBER.miglinkSourceType=win

linkconnect.config.MAPPING_NUMBER.miglinkSource=WIN_FQDN/WIN_SHARE

linkconnect.config.MAPPING_NUMBER.linkConnectTarget=CACHE_SHARE\SUBDIV

linkconnect.config.MAPPING_NUMBER.key=SECRET_KEY

linkconnect.config.MAPPING_NUMBER.linkDeletionMonitoring.enabled=<bool>

where:

miglinkSourceType must be set to exactly win

MAPPING_NUMBER starts at 0 for the first share mapping in this file – mappings must be numbered consecutively

WIN_FQDN/WIN_SHARE describes the file server share to be mapped

CACHE_SHARE is a LinkConnect Cache Share name (created above)

this value is CASE-SENSITIVE

SUBDIV must be the single decimal digit 1

SECRET_KEY is at least 40 ASCII characters – this key protects against counterfeit link creation

recommendation: use a password generator with 64 'All Chars'

linkDeletionMonitoring.enabled may be set to true or false to enable/disable Link Deletion Monitoring on this share – see warning above

If clients will access the storage via nested sub-shares rather than only the top-level configured MigLink Source share, the known sub-shares should be added as follows:

linkconnect.config.MAPPING_NUMBER.knownSubShares=share1,share2

This list of sub-shares can be updated later as more subdirectories are shared. Where MigLink access occurs on unexpected shares, warnings will be written to the LinkConnect agent.log.

Save the configuration and restart the DataCore FileFly Agent service.

Important: Refer to 3.3.1 to ensure that the configuration on this FileFly LinkConnect Server is included in your backup. If the FileFly LinkConnect Server needs to be rebuilt, the secret key will be required to enable previously link-migrated files to be securely accessed.
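The Manual Overrides lines above follow a strict pattern, so they can be generated rather than typed by hand. The Python sketch below renders them from a list of share mappings and creates a 64-character key with the standard `secrets` module. It is a minimal, hypothetical helper (`ShareMapping`, `generate_key` and `render_overrides` are illustrative names, not part of FileFly). It assumes that leaving the backslash out of the key alphabet is harmless, and it enforces only the rules stated above: numbering from 0, a SUBDIV of 1, and keys of at least 40 ASCII characters.

```python
import secrets
import string
from dataclasses import dataclass, field
from typing import List

# Assumption: printable ASCII without whitespace, minus the backslash
# (some configuration parsers treat a backslash as an escape character).
KEY_ALPHABET = "".join(
    c for c in string.ascii_letters + string.digits + string.punctuation if c != "\\"
)
MIN_KEY_LENGTH = 40  # SECRET_KEY must be at least 40 ASCII characters


@dataclass
class ShareMapping:
    source: str                # WIN_FQDN/WIN_SHARE
    cache_share: str           # CACHE_SHARE (case-sensitive)
    key: str                   # SECRET_KEY
    ldm_enabled: bool = False  # linkDeletionMonitoring.enabled
    known_sub_shares: List[str] = field(default_factory=list)


def generate_key(length: int = 64) -> str:
    """Random key built with the cryptographically secure `secrets` module."""
    if length < MIN_KEY_LENGTH:
        raise ValueError(f"key length must be at least {MIN_KEY_LENGTH}")
    return "".join(secrets.choice(KEY_ALPHABET) for _ in range(length))


def render_overrides(alias_fqdn: str, mappings: List[ShareMapping],
                     source_type: str = "win") -> str:
    """Render Manual Overrides lines; mappings are numbered 0, 1, 2, ..."""
    lines = [f"linkconnect.config.linkConnectAlias={alias_fqdn}"]
    for n, m in enumerate(mappings):
        if len(m.key) < MIN_KEY_LENGTH or not m.key.isascii():
            raise ValueError(f"mapping {n}: key must be >= {MIN_KEY_LENGTH} ASCII chars")
        prefix = f"linkconnect.config.{n}."
        lines += [
            f"{prefix}miglinkSourceType={source_type}",
            f"{prefix}miglinkSource={m.source}",
            f"{prefix}linkConnectTarget={m.cache_share}\\1",  # SUBDIV is always 1
            f"{prefix}key={m.key}",
            f"{prefix}linkDeletionMonitoring.enabled={'true' if m.ldm_enabled else 'false'}",
        ]
        if m.known_sub_shares:
            lines.append(f"{prefix}knownSubShares={','.join(m.known_sub_shares)}")
    return "\n".join(lines)


if __name__ == "__main__":
    mapping = ShareMapping("winserver.example.com/pub", "CacheA", generate_key())
    print(render_overrides("link.example.com", [mapping]))
```

The printed lines can be pasted into the 'Manual Overrides' panel; the sketch does not check that the Cache Share exists or that its case matches, so those checks remain manual.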

...

If DFSN is to be used (even if not yet using HA), namespaces and folders must be configured as follows:

Add a DFSN namespace:

the namespace must not be hosted on a LinkConnect node

the namespace name must match the LinkConnect Cache Share name exactly (including case)

the namespace must be 'Domain-based'

Add a folder to the namespace:

folder name must be of the form: SUBDIV_MwClC_1 e.g. 1_MwClC_1

Add folder target:

\\NODE\CACHE_SHARE\SUBDIV_MwClC_1

where NODE is a LinkConnect node which exports CACHE_SHARE

where CACHE_SHARE matches the namespace name exactly (including case)

where SUBDIV_MwClC_1 matches the new folder name exactly (including case)

the folder target will already exist – it was created by the FileFly LinkConnect Server in the previous section

DO NOT enable replication

For HA configurations, add additional targets to the same folder for the remaining LinkConnect node(s)

For example, \\example.com\CacheA\1_MwClC_1 may refer to both of the following locations:

\\server1.example.com\CacheA\1_MwClC_1

\\server2.example.com\CacheA\1_MwClC_1 (optional 2nd node)
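The naming rules above can be summarized in a short Python sketch that derives the namespace folder path and the folder target for each LinkConnect node. The function names are hypothetical; the sketch assumes the namespace is named exactly after the Cache Share, and it only builds the path strings. It does not create anything in DFS Management.

```python
from typing import List, Tuple

SUBDIV = 1  # SUBDIV must be the single decimal digit 1


def dfsn_folder_name(subdiv: int = SUBDIV) -> str:
    """Folder name of the form SUBDIV_MwClC_1, e.g. 1_MwClC_1."""
    return f"{subdiv}_MwClC_1"


def dfsn_layout(domain: str, cache_share: str, nodes: List[str]) -> Tuple[str, List[str]]:
    """Return the namespace folder path and one folder target per LinkConnect node.

    The namespace name is the Cache Share name, with case preserved exactly.
    """
    if not nodes:
        raise ValueError("at least one LinkConnect node is required")
    folder = dfsn_folder_name()
    namespace_folder = f"\\\\{domain}\\{cache_share}\\{folder}"
    targets = [f"\\\\{node}\\{cache_share}\\{folder}" for node in nodes]
    return namespace_folder, targets


if __name__ == "__main__":
    path, targets = dfsn_layout("example.com", "CacheA",
                                ["server1.example.com", "server2.example.com"])
    print(path)
    for target in targets:
        print("  target:", target)
```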

The LinkConnect configuration, including the secret key, for each FileFly LinkConnect Server will be synchronized with the FileFly Admin Portal. These details will be part of your Admin Portal configuration backup.

However, in rare cases where the keys have been completely lost and a DataCore FileFly LinkConnect Server needs to be rebuilt, it is possible to temporarily disable the Counterfeit Link Protection (CLP) and re-sign all links with a new key. To enable this behavior, recreate the configuration as above (with a new secret key), and add a line similar to the following:

linkconnect.config.disableSignatureSecurityUntil=2020-04-14T01:00:00Z

Regular scanning of the configured share mapping will re-sign all scanned links with the new key, and any user-generated access to these links will function without verifying signatures until the configured cutoff time, specified in Zulu time (UTC). For a large system, it may be necessary to allow several days before the cutoff so that the key update can complete. Users may continue to access the system during this period.
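Because the cutoff must be expressed in Zulu time, a small helper can avoid time-zone mistakes. This hypothetical Python sketch adds a chosen number of days to the current time and formats the override line shown above; it assumes the cutoff is written with second precision and a trailing Z, as in the example.

```python
from datetime import datetime, timedelta, timezone
from typing import Optional


def signature_cutoff_line(days: float, now: Optional[datetime] = None) -> str:
    """Build the override line with a cutoff `days` from now, in UTC (Zulu) time."""
    if now is None:
        now = datetime.now(timezone.utc)
    elif now.tzinfo is None:
        raise ValueError("now must be timezone-aware")
    cutoff = (now + timedelta(days=days)).astimezone(timezone.utc).replace(microsecond=0)
    return ("linkconnect.config.disableSignatureSecurityUntil="
            + cutoff.strftime("%Y-%m-%dT%H:%M:%SZ"))


if __name__ == "__main__":
    # Allow several days for a large system to finish re-signing links.
    print(signature_cutoff_line(days=5))
```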

...

smb://{server}/{nas}/{share}/[{path}/]

Where:

server – FQDN of a FileFly LinkConnect Server that is configured to support the file server share

nas – Windows file server FQDN

share – Windows file server SMB share

path – path within the share

Example:

smb://link.example.com/winserver.example.com/pub/projects/
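The URI embeds two different hosts: the FileFly LinkConnect Server first, then the Windows file server. The following minimal Python sketch (hypothetical helper names) builds and splits such URIs with the standard `urllib.parse.urlsplit`, which can help avoid swapping the two hosts. It assumes share and path names need no percent-encoding.

```python
from typing import Dict
from urllib.parse import urlsplit


def build_source_uri(server: str, nas: str, share: str, path: str = "") -> str:
    """Build smb://{server}/{nas}/{share}/[{path}/] with a trailing slash."""
    segments = [server, nas, share] + [p for p in path.split("/") if p]
    return "smb://" + "/".join(segments) + "/"


def parse_source_uri(uri: str) -> Dict[str, str]:
    """Split a LinkConnect Source URI into server, nas, share and path."""
    parts = urlsplit(uri)
    segments = [p for p in parts.path.split("/") if p]
    if parts.scheme != "smb" or not parts.netloc or len(segments) < 2:
        raise ValueError(f"not a LinkConnect smb:// source URI: {uri}")
    return {"server": parts.netloc, "nas": segments[0],
            "share": segments[1], "path": "/".join(segments[2:])}


if __name__ == "__main__":
    uri = build_source_uri("link.example.com", "winserver.example.com", "pub", "projects")
    print(uri)
    print(parse_source_uri(uri))
```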

...

NetApp Filer(s) must be licensed for the particular protocol(s) to be used (FPolicy requires an SMB license)

A FileFly license that includes an entitlement for FileFly NetApp FPolicy Server

DataCore FileFly FPolicy Servers require EXCLUSIVE use of SMB connections to their associated NetApp Vservers. This means Explorer windows must not be opened, drives must not be mapped, nor should any UNC paths to the filer be accessed from the FileFly FPolicy Server machine. Failure to observe this restriction will result in unpredictable FPolicy disconnections and interrupted service.

When creating a production deployment plan, please refer to 3.5.

DataCore FileFly FPolicy Server requires that the Filer is running:

Data ONTAP version 9.x

...

Before starting the installation the following parameters must be considered:

Management Interface IP Address: the address for management access to the Vserver (not to be confused with cluster or node management addresses)

SMB Privileged User: a domain user for the exclusive use of FPolicy

For each Vserver, ensure that 'Management Access' is allowed for at least one network interface. Check the network interface in OnCommand System Manager; if Management Access is not enabled, create a new interface just for Management Access. Note that using the same interface for management and data access may cause firewall problems.

Management authentication may be configured to use either passwords or client certificates. Management connections may be secured via TLS – this is mandatory when using certificate-based authentication.

For password-based authentication:

Open a command line session to the cluster management address

Add a user for Application 'ontapi' with Role 'vsadmin'

security login create -user-or-group-name <username> -application ontapi -authentication-method password -role vsadmin -vserver <vserver fqdn>

Record the username and password for later use on the 'Management' tab in DataCore FileFly NetApp Cluster-mode Config

Alternatively, for certificate-based authentication:

Create a client certificate with common name <Username>

Open a command line session to the cluster management address

Upload the CA Certificate (or the client certificate itself if self-signed):

security certificate install -type client-ca -vserver <vserver-name>

Paste the contents of the CA Certificate at the prompt

Add a user for Application 'ontapi' with Role 'vsadmin'

security login create -user-or-group-name <Username> -application ontapi -authentication-method cert -role vsadmin -vserver <vserver-name>

If it has not already been created, create the SMB Privileged User on the domain. Each FileFly FPolicy Server will use the same SMB Privileged User for all Vservers that it will manage.

Open a command line session to the cluster management address:

Create a new local 'Windows' group

cifs users-and-groups local-group create -group-name <Name> -vserver <vserver fqdn>

Assign ALL available privileges to the local group

cifs users-and-groups privilege add-privilege -user-or-group-name <Group Name> -privileges SeTcbPrivilege,SeBackupPrivilege,SeRestorePrivilege,SeTakeOwnershipPrivilege,SeSecurityPrivilege,SeChangeNotifyPrivilege -vserver <vserver fqdn>

Add the SMB Privileged User to this group

cifs users-and-groups local-group add-members -group-name <Name> -member-names <Domain\User or Group Name> -vserver <vserver fqdn>

Allow a few minutes for the change to take effect (or FPolicy Server operations may fail with access denied errors)

On each FileFly FPolicy Server machine:

Close any SMB sessions open to Vserver(s) before proceeding

Ensure the SMB Privileged User has the 'Log on as a service' privilege

Run the DataCore FileFly NetApp FPolicy Server.exe

Follow the prompts to complete the installation

Follow the instructions to activate the installation

Run the installer: DataCore FileFly NetApp Cluster-mode Config.exe

Run DataCore FileFly NetApp Cluster-mode Config.

On the 'FPolicy Config' tab:

Enter the FQDN used to register the FileFly FPolicy Server(s) in FileFly Admin Portal

Enter the SMB Privileged User

On the 'Management' tab:

Provide the credentials for management access (see above)

On the 'Vservers' tab:

Click Add…

Enter the SMB and management interface details

If using TLS for Management, click Get Server CA

Click Apply to Filer

Once configuration is complete, click Save.

Ensure the netapp_clustered.cfg file has been copied to the correct location on all FileFly FPolicy Server machines

C:\Program Files\DataCore FileFly\data\FileFly Agent\netapp_clustered.cfg

Restart the DataCore FileFly Agent service on each machine

SMB shares that will be used in FileFly Policies must be configured to Hide symbolic links. If a different setting is required for other SMB clients, create a new share at the same location just for FileFly traversal that does hide links. To modify the symlink behavior on a share:

Open a command line session to the cluster management address

For each share:

cifs share modify -share-name <sharename> -symlink-properties hide -vserver <vserver fqdn>

netapp://{FPolicy Server}/{NetApp Vserver}/{SMB Share}/[{path}/]

Where:

FPolicy Server – FQDN alias that points to all FileFly FPolicy Servers for the given Vserver

NetApp Vserver – FQDN of the Vserver's Data Access interface

SMB Share – NetApp SMB share name

Example:

netapp://fpol-svrs.example.com/vs1.example.com/data/
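A similar check can be applied to netapp:// Source URIs. This hypothetical Python sketch splits a URI into its parts and, as an assumption drawn from the descriptions above (both hosts are FQDNs), rejects host names that contain no dot.

```python
from typing import Dict
from urllib.parse import urlsplit


def parse_netapp_uri(uri: str) -> Dict[str, str]:
    """Split netapp://{FPolicy Server}/{NetApp Vserver}/{SMB Share}/[{path}/]."""
    parts = urlsplit(uri)
    segments = [p for p in parts.path.split("/") if p]
    if parts.scheme != "netapp" or not parts.netloc or len(segments) < 2:
        raise ValueError(f"not a netapp:// source URI: {uri}")
    fpolicy, vserver = parts.netloc, segments[0]
    # The FPolicy alias and the Vserver Data Access interface are both FQDNs.
    for host in (fpolicy, vserver):
        if "." not in host:
            raise ValueError(f"expected an FQDN, got {host!r}")
    return {"fpolicy_server": fpolicy, "vserver": vserver,
            "share": segments[1], "path": "/".join(segments[2:])}


if __name__ == "__main__":
    print(parse_netapp_uri("netapp://fpol-svrs.example.com/vs1.example.com/data/"))
```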

...

After an entire volume containing stubs is restored from snapshot, a Post-Restore Revalidate Policy must be run, as per the restore procedure described in 3.4.

Users cannot perform self-service restoration of stubs. However, an administrator may restore specific stubs or sets of stubs from snapshots by following the procedure outlined below. Be sure to provide this procedure to all administrators.

IMPORTANT: The following instructions mandate the use of Robocopy specifically. Other tools, such as Windows Explorer copy or the 'Restore' function in the Previous versions dialog, WILL NOT correctly restore stubs.

To restore one or more stubs from a snapshot-folder like:

\\<filer>\<share>\~snapshot\<snapshot-name>\<path>

to a restore folder on the same Filer like:

\\<filer>\<share>\<restore-path>

perform the following steps:

Go to a FileFly FPolicy Server machine

Open a command window

robocopy <snapshot-folder> <restore-folder> [<filename>…] [/b]

On a client machine (NOT the FileFly FPolicy Server), open all of the restored file(s) or demigrate them using a Demigrate Policy

Check that the file(s) have demigrated correctly

IMPORTANT: Until the demigration above is performed, the restored stub(s) may occupy space for the full size of the file.

As with any other FileFly restore procedure, be sure to run a Post-Restore Revalidate Policy across the volume before the next Scrub – see 3.4.
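For administrators who script this step, the hypothetical Python sketch below assembles the robocopy command line from the snapshot folder and the restore folder, refuses a restore folder on a different Filer, and runs it only on Windows. Whether to pass /b is an explicit argument, mirroring the optional [/b] in the command above. It relies on robocopy's convention that exit codes below 8 indicate no failures; opening or demigrating the restored files from a client machine must still be done separately.

```python
import subprocess
import sys
from typing import List, Sequence


def filer_of(unc: str) -> str:
    """Return the (lower-cased) server part of a UNC path such as \\\\filer\\share\\dir."""
    if not unc.startswith("\\\\"):
        raise ValueError(f"not a UNC path: {unc}")
    return unc[2:].split("\\", 1)[0].lower()


def restore_argv(snapshot_folder: str, restore_folder: str,
                 filenames: Sequence[str] = (), *, backup_mode: bool) -> List[str]:
    """Build the robocopy argument list for a snapshot restore."""
    if filer_of(snapshot_folder) != filer_of(restore_folder):
        raise ValueError("the restore folder must be on the same Filer as the snapshot")
    argv = ["robocopy", snapshot_folder, restore_folder, *filenames]
    if backup_mode:
        argv.append("/b")
    return argv


def run_restore(argv: List[str]) -> bool:
    """Run robocopy (Windows only); exit codes below 8 mean no failures occurred."""
    if sys.platform != "win32":
        raise RuntimeError("robocopy is only available on Windows")
    return subprocess.run(argv).returncode < 8
```

Passing the arguments as a list to `subprocess.run` avoids shell quoting problems with share or file names that contain spaces.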

...

Except when following the procedure in 5.3.5, Robocopy must not be used with the /b (backup mode) switch when copying FileFly NetApp stubs.

When in backup mode, robocopy attempts to copy stub files as-is rather than demigrating them as they are read. This behavior is not supported.

Note: The /b switch requires Administrator privilege – it is not available to normal users.

...

Open a command line session to the cluster management address

security login show -vserver <vserver-name>

There should be an entry for the expected user for application 'ontapi' with role 'vsadmin'

Open a command line session to the cluster management address

vserver context -vserver <vserver-name>

security certificate show

There should be a 'server' certificate for the Vserver management FQDN (NOT the bare hostname)

If using certificate-based authentication, there should be a 'client-ca' entry

security ssl show

There should be an enabled entry for the Vserver management FQDN (NOT the bare hostname)

Vserver configuration can be validated using DataCore FileFly NetApp Cluster-mode Config.

Open the netapp_clustered.cfg in FileFly NetApp Cluster-mode Config

Go to the 'Vservers' tab

Select a Vserver

Click Edit…

Click Verify

If the FileFly FPolicy Server reports privileged share not found, there is a misconfiguration or SMB issue. Please attempt the following steps:

Check all configuration using troubleshooting steps described above

Ensure the FileFly FPolicy Server has no other SMB sessions to Vservers

run net use from Windows Command Prompt

remove all mapped drives

Reboot the server

Retry the failed operation

Check for new errors in agent.log

...

OneFS does not provide an interface for performing FileFly stub-based migration. As an alternative, FileFly provides a link-based migration mechanism via a FileFly LinkConnect Server. See 4.9 for details of the Link-Migrate operation.

Link-Migration works by pairing a OneFS SMB share with a corresponding LinkConnect Cache Share. Typically a top-level share on each OneFS device is mapped to a unique share (or subdivision) on a FileFly LinkConnect Server. Multiple OneFS systems may use shares/subdivisions on the same FileFly LinkConnect Server if desired.

Once this configuration is completed, Link-Migrate policies convert files on the source OneFS share to links pointing to the destination files via the LinkConnect Cache Share, according to configured rules.

Link-Migrated files can be identified by the 'O' (Offline) attribute in Explorer. Depending on the version of Windows, files with this flag may be displayed with an overlay icon.

...

An NTFS Cache Volume of at least 1TB – see 2.2.4

A FileFly license that includes an entitlement for FileFly LinkConnect Server.

A supported secondary storage destination (excluding scsp and scspdirect)

When creating a production deployment plan, please refer to 3.5.

DataCore FileFly LinkConnect Server requires OneFS version 8.1.2.0 or higher

Windows clients require a supported 64-bit Windows operating system:

Windows 10

Windows Server 2019

Windows Server 2016

Windows Server 2012 R2

In order to access link-migrated files, the LinkConnect Client Driver must be installed on each client machine – see 2.3.

...

Similarly to the Stub Deletion Monitoring feature provided by DataCore FileFly Agents on Windows, Link Deletion Monitoring (LDM) identifies secondary storage files that are no longer referenced in order to facilitate recovery of storage space by Scrub Policies. This feature extends not only to MigLinks that are demigrated or directly deleted by the user, but also to other cases such as overwriting a MigLink or renaming a different file over the top of a MigLink.

Unlike SDM, LDM requires a number of maintenance scans to determine that a given secondary storage file is no longer referenced. It should be noted that interrupting the maintenance process (e.g. by restarting the caretaker node or transitioning the caretaker role) will delay the detection of unreferenced secondary storage. For optimal and timely storage space recovery, ensure that LinkConnect Servers can run uninterrupted for extended periods.

Warning: in order to avoid LDM incorrectly identifying files as deleted – leading to unwanted data loss during Scrub – it is critical to ensure that users cannot move/rename MigLinks out of the scanned portion of the directory tree within the filesystem. This can be achieved by always creating the share used for your 'miglinkSource' at the root of the filesystem. An additional share may be created solely for this purpose.

To utilize LDM, it must first be enabled on a per-share basis.

...

Using the OneFS Storage Administration web console:

Navigate to Access → Membership & Roles → Roles

Edit the BackupAdmin role

add the LinkConnect user to this role

Navigate to Protocols → Windows Sharing (SMB) → SMB Shares

Edit the share to be paired with a LinkConnect Cache Share

Add the LinkConnect user as a new member

Specify 'Run as root' permission

Move the new member to the top of the members list

On each FileFly LinkConnect Server machine:

Add the user created above to the local 'Administrators' group

Assign the 'Log on as a service' privilege to this user

Run the DataCore FileFly LinkConnect Server.exe

Follow the prompts to complete the installation

Follow the instructions to activate the installation

the Servers page will report that the server is unconfigured

On your cache volume (e.g. X:), navigate to X:\1bf8ce99-8c8a-4092-9c98-2b9c850c57a1\shares.

To create each Cache Share:

Create a new folder with the desired share name

Right click → Properties → Sharing → Advanced Sharing…

Tick 'Share this folder'

Share name must match the folder name exactly (including case)

Permissions:

Everyone: Allow 'Read' only

No other permissions

On the Admin Portal 'Servers' page, edit the configuration of the FileFly LinkConnect Server. In the 'Manual Overrides' panel, add the following options:

linkconnect.config.linkConnectAlias=ALIAS_FQDN

where ALIAS_FQDN is either the FQDN of the FileFly LinkConnect Server (standalone mode), or of the DFSN domain (standalone or high-availability).

For each share mapping, add:

linkconnect.config.MAPPING_NUMBER.miglinkSourceType=isilon

linkconnect.config.MAPPING_NUMBER.miglinkSource=ONEFS_FQDN/ONEFS_SHARE

linkconnect.config.MAPPING_NUMBER.linkConnectTarget=CACHE_SHARE\SUBDIV

linkconnect.config.MAPPING_NUMBER.key=SECRET_KEY

linkconnect.config.MAPPING_NUMBER.linkDeletionMonitoring.enabled=<bool>

where:

miglinkSourceType must be set to exactly isilon

MAPPING_NUMBER starts at 0 for the first share mapping in this file – mappings must be numbered consecutively

ONEFS_FQDN/ONEFS_SHARE describes the OneFS share to be mapped

CACHE_SHARE is a LinkConnect Cache Share name (created above)

this value is CASE-SENSITIVE

SUBDIV must be the single decimal digit 1

SECRET_KEY is at least 40 ASCII characters – this key protects against counterfeit link creation

recommendation: use a password generator with 64 'All Chars'

linkDeletionMonitoring.enabled may be set to true or false to enable/disable Link Deletion Monitoring on this share – see warning above

If clients will access the storage via nested sub-shares rather than only the top-level configured MigLink Source share, the known sub-shares should be added as follows:

linkconnect.config.MAPPING_NUMBER.knownSubShares=share1,share2

This list of sub-shares can be updated later as more subdirectories are shared. Where MigLink access occurs on unexpected shares, warnings will be written to the LinkConnect agent.log.

Save the configuration and restart the DataCore FileFly Agent service.

Important: Refer to 3.3.1 to ensure that the configuration on this FileFly LinkConnect Server is included in your backup. If the FileFly LinkConnect Server needs to be rebuilt, the secret key will be required to enable previously link-migrated files to be securely accessed.
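Before restarting the service, the overrides can be checked mechanically. This Python sketch (hypothetical, not a FileFly tool) parses the share-mapping lines and reports violations of the rules listed above; it does not verify that the OneFS share or the Cache Share actually exists.

```python
import re
from typing import Dict, List

OPTION_RE = re.compile(r"^linkconnect\.config\.(\d+)\.([A-Za-z.]+)=(.*)$")
TARGET_RE = re.compile(r"^[^\\]+\\1$")  # CACHE_SHARE\SUBDIV, where SUBDIV is 1


def check_mappings(text: str, source_type: str = "isilon") -> List[str]:
    """Return a list of problems found in LinkConnect share-mapping overrides."""
    mappings: Dict[int, Dict[str, str]] = {}
    for line in text.splitlines():
        match = OPTION_RE.match(line.strip())
        if match:
            number, option, value = match.groups()
            mappings.setdefault(int(number), {})[option] = value
    problems = []
    if sorted(mappings) != list(range(len(mappings))):
        problems.append("mapping numbers must start at 0 and be consecutive")
    for n, opts in sorted(mappings.items()):
        if opts.get("miglinkSourceType") != source_type:
            problems.append(f"{n}: miglinkSourceType must be exactly {source_type}")
        if "/" not in opts.get("miglinkSource", ""):
            problems.append(f"{n}: miglinkSource must be FQDN/SHARE")
        if not TARGET_RE.match(opts.get("linkConnectTarget", "")):
            problems.append(f"{n}: linkConnectTarget must be CACHE_SHARE\\1")
        key = opts.get("key", "")
        if len(key) < 40 or not key.isascii():
            problems.append(f"{n}: key must be at least 40 ASCII characters")
        ldm = opts.get("linkDeletionMonitoring.enabled")
        if ldm is not None and ldm not in ("true", "false"):
            problems.append(f"{n}: linkDeletionMonitoring.enabled must be true or false")
    return problems
```

An empty list means only that the rules above are satisfied; the LinkConnect agent.log remains the authoritative source for configuration errors.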

...

If DFSN is to be used (even if not yet using HA), namespaces and folders must be configured as follows:

Add a DFSN namespace:

the namespace must not be hosted on a LinkConnect node

the namespace name must match the LinkConnect Cache Share name exactly (including case)

the namespace must be 'Domain-based'

Add a folder to the namespace:

folder name must be of the form: SUBDIV_MwClC_1 e.g. 1_MwClC_1

Add folder target:

\\NODE\CACHE_SHARE\SUBDIV_MwClC_1

where NODE is a LinkConnect node which exports CACHE_SHARE

where CACHE_SHARE matches the namespace name exactly (including case)

where SUBDIV_MwClC_1 matches the new folder name exactly (including case)

the folder target will already exist – it was created by the FileFly LinkConnect Server in the previous section

DO NOT enable replication

For HA configurations, add additional targets to the same folder for the remaining LinkConnect node(s)

For example, \\example.com\CacheA\1_MwClC_1 may refer to both of the following locations:

\\server1.example.com\CacheA\1_MwClC_1

\\server2.example.com\CacheA\1_MwClC_1 (optional 2nd node)

The LinkConnect configuration, including the secret key, for each FileFly LinkConnect Server will be synchronized with the FileFly Admin Portal. These details will be part of your Admin Portal configuration backup.

However, in rare cases where the keys have been completely lost and a DataCore FileFly LinkConnect Server needs to be rebuilt, it is possible to temporarily disable the Counterfeit Link Protection (CLP) and re-sign all links with a new key. To enable this behavior, recreate the configuration as above (with a new secret key), and add a line similar to the following:

linkconnect.config.disableSignatureSecurityUntil=2020-04-14T01:00:00Z

Regular scanning of the configured share mapping will re-sign all scanned links with the new key, and any user-generated access to these links will function without verifying signatures until the configured cutoff time, specified in Zulu time (UTC). For a large system, it may be necessary to allow several days before the cutoff so that the key update can complete. Users may continue to access the system during this period.

...

smb://{server}/{nas}/{share}/[{path}/]

Where:

server – FQDN of a FileFly LinkConnect Server that is configured to support the OneFS share

nas – OneFS FQDN

share – OneFS SMB share

path – path within the share

Example:

smb://link.example.com/onefs.example.com/pub/projects/

...

Before proceeding with the installation, the following will be required:

Cloud Gateway 3.0.0 or above

Swarm 8 or above

a license that includes an entitlement for Swarm

The following Policy limitations apply to this scheme:

it may not be used as a Link-Migration destination

it may not be used as the new destination for Change Destination Tier policies

it may not be used as the new destination for Retarget Destination policies

The TCP port used to access the Swarm Content Gateway via HTTP or HTTPS must be allowed by any firewalls between the DataCore FileFly Gateway and the Swarm endpoint. For further information regarding firewall configuration see 8.

...

Enable 'Include metadata headers' to store per-file HTTP metadata with the destination objects, such as original filename and location, content-type, owner and timestamps – see 5.5.6 for details.

Also enable 'Include Content-Disposition' to include the original filename for use when downloading the target objects directly using a web browser.

...

During migration, each newly migrated file is recorded in the corresponding index. The index may be used in disaster scenarios where:

stubs have been lost, and

a recovery file produced by a Create Recovery File from Source policy is not available, and

no current backup of the stubs exists

Index performance is optimized for migrations and demigrations, not for Create Recovery File from Destination policies.

Create Recovery File from Source policies are the recommended means to obtain a Recovery file for restoring stubs. This method provides better performance and the most up-to-date stub location information.

It is recommended to regularly run Create Recovery File from Source policies following Migration policies.

...

The following metadata fields are supported:

X-Alt-Meta-Name – the original source file's filename (excluding directory path)

X-Alt-Meta-Path – the original source file's directory path (excluding the filename) in a platform-independent manner such that '/' is used as the path separator and the path will start with '/', followed by drive/volume/share if appropriate, but not end with '/' (unless this path represents the root directory)

X-FileFly-Meta-Partition – the Destination URI partition – if no partition is present, this header is omitted

X-Source-Meta-Host – the FQDN of the original source file's server

X-Source-Meta-Owner – the owner of the original source file in a format appropriate to the source system (e.g. DOMAIN\username)

X-Source-Meta-Modified – the Last Modified timestamp of the original source file at the time of migration in RFC3339 format

X-Source-Meta-Created – the Created timestamp of the original source file in RFC3339 format

X-Source-Meta-Attribs – a case-sensitive sequence of characters {AHRS} representing the original source file's file flags: Archive, Hidden, Read-Only and System; all other characters are reserved for future use and should be ignored

Content-Type – the MIME Type of the content, determined based on the file-extension of the original source filename

Note: Timestamps may be omitted if the source file timestamps are not set.

Non-ASCII characters will be stored using RFC2047 encoding, as described in the Swarm documentation. Swarm will decode these values prior to indexing in Elasticsearch.
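If you read an object's raw headers outside Swarm, the fields above can be interpreted as follows. This is a sketch under stated assumptions. The headers are assumed to arrive as a plain dict keyed by the exact names listed above, although real HTTP header lookup is case-insensitive. RFC2047 decoding uses Python's standard `email.header` module, not Swarm's own decoder. All function names are hypothetical.

```python
from datetime import datetime
from email.header import decode_header, make_header

# Flag letters documented for X-Source-Meta-Attribs (case-sensitive)
ATTRIB_FLAGS = {"A": "archive", "H": "hidden", "R": "read-only", "S": "system"}


def decode_value(raw: str) -> str:
    """Decode a header value that may contain RFC 2047 encoded-words."""
    return str(make_header(decode_header(raw)))


def parse_rfc3339(value: str) -> datetime:
    """Parse an RFC 3339 timestamp into a timezone-aware datetime."""
    # fromisoformat() only accepts a trailing 'Z' from Python 3.11 onwards
    if value.endswith("Z"):
        value = value[:-1] + "+00:00"
    return datetime.fromisoformat(value)


def parse_attribs(value: str) -> set:
    """Map {AHRS} to flag names; reserved characters are ignored."""
    return {ATTRIB_FLAGS[c] for c in value if c in ATTRIB_FLAGS}


def original_location(headers: dict) -> str:
    """Rebuild the original path from X-Alt-Meta-Path and X-Alt-Meta-Name."""
    path = decode_value(headers["X-Alt-Meta-Path"])
    name = decode_value(headers["X-Alt-Meta-Name"])
    # The path ends with '/' only when it is the root directory
    return path + name if path.endswith("/") else path + "/" + name


def summarize(headers: dict) -> dict:
    """Hypothetical helper: collect the documented fields into one record."""
    info = {
        "location": original_location(headers),
        "host": headers.get("X-Source-Meta-Host"),
        "attribs": parse_attribs(headers.get("X-Source-Meta-Attribs", "")),
    }
    if "X-Source-Meta-Owner" in headers:
        info["owner"] = decode_value(headers["X-Source-Meta-Owner"])
    # Timestamps are omitted when the source file timestamps were not set
    for key, field in (("modified", "X-Source-Meta-Modified"),
                       ("created", "X-Source-Meta-Created")):
        if field in headers:
            info[key] = parse_rfc3339(headers[field])
    return info
```

Treat a missing timestamp as "not set" rather than as an error, as the note above describes.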

...

The scspdirect scheme should only be used when accessing Swarm storage nodes directly. Swarm may be used as a migration destination only.

Swarm (SCSP) traffic is not encrypted in transit when using this scheme. Optionally, the plugin can employ client-side encryption to protect migrated data at rest.

Normally, Swarm will be accessed via a Swarm Content Gateway, in which case the scsp scheme must be used instead (see 5.5).


Before proceeding with the installation, the following will be required:

Swarm 8 or above

a license that includes an entitlement for Swarm


The following Policy limitations apply to this scheme:

it may not be used as a Link-Migration destination

it may not be used as the new destination for Change Destination Tier policies

it may not be used as the new destination for Retarget Destination policies


The Swarm storage node port must be allowed by any firewalls between the DataCore FileFly Gateway and the Swarm storage nodes. For further information regarding firewall configuration see B.


...

Enable 'Include metadata headers' to store per-file HTTP metadata with the destination objects, such as original filename and location, content-type, owner and timestamps – see 5.5.6 for details.

Also enable 'Include Content-Disposition' to include the original filename for use when downloading the target objects directly using a web browser.

...


Refer to 5.5.5.


...

Before proceeding with the installation, the following will be required:

an Amazon Web Services (AWS) Account

a license that includes an entitlement for Amazon S3

Dedicated buckets – without versioning enabled – should be used for FileFly migration data. However, do not create any S3 buckets at this stage.

...

Extended metadata fields are written when the 'Migrate with original filenames' option is selected for a migration destination bucket.

| Header Field | Content |
|---|---|
| x-amz-meta-orig-host | Source server FQDN |
| x-amz-meta-orig-name | Original filename (without path) |
| x-amz-meta-orig-modified-time | Modified timestamp |
| x-amz-meta-orig-created-time | Creation timestamp |
| x-amz-meta-orig-attribs | Subset of characters {AHRS} representing the original source file's flags |
| Content-Disposition (optional) | Original name for web browser download |
| Security Details | as appropriate |
| x-amz-meta-orig-owner | File owner – e.g. Domain\JoeUser |
| x-amz-meta-orig-sddl | Microsoft SDDL format security descriptor |

Notes:

headers will be sent in UTF-8 using RFC2047 encoding as necessary to unambiguously represent the original metadata values (in accordance with the HTTP/1.1 specification – see RFC2616/2.2)

due to Amazon-specific limitations, sequences of adjacent whitespace within x-amz-meta-orig-name may be returned as a single space by some client software

all timestamps are stored as UTC in RFC3339 format
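The "as necessary" rule in the first note can be illustrated with Python's standard `email.header` module. This is a sketch of generic RFC2047 behavior, not FileFly's encoder, and the function names are hypothetical. The notes above do not say whether FileFly emits the B (base64) or Q form of encoded-words. The sketch also encodes ASCII text that contains "=?", because such text could otherwise be mistaken for an encoded-word. `str.isascii()` requires Python 3.7 or later.

```python
from email.header import Header, decode_header, make_header


def encode_header_value(value: str) -> str:
    """Hypothetical: send plain printable ASCII unchanged, else an RFC 2047 encoded-word."""
    # ASCII text that already contains '=?' would be ambiguous, so encode it too
    if value.isascii() and value.isprintable() and "=?" not in value:
        return value
    # maxlinelen=0 disables folding so the result stays on a single header line
    return Header(value, "utf-8").encode(maxlinelen=0)


def decode_header_value(raw: str) -> str:
    """Hypothetical: reverse the encoding, as a client reading the metadata would."""
    return str(make_header(decode_header(raw)))
```

The round trip preserves runs of spaces, so a name such as "résumé  final.docx" decodes with both spaces intact. As the second note warns, some S3 client software may still present x-amz-meta-orig-name with the spaces collapsed.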


...

Important: Prior to production deployment, please confirm with DataCore that the chosen device or service has been certified for compatibility to ensure that it will be covered by your support agreement.

Prerequisites:

suitable S3 API credentials

a license that includes an entitlement for generic S3 endpoints

Dedicated buckets – without versioning enabled – should be used for FileFly migration data. However, do not create any S3 buckets at this stage.

...

The S3 protocol supports a virtual-host-style bucket access method, for example https://bucket.s3.example.com rather than only https://s3.example.com/bucket. This facilitates connecting to a node in the correct region for the bucket, rather than requiring a redirect.

Generally the 'Use Virtual Host Access' option should be enabled (the default) to ensure optimal performance and correct operation. However, if the generic S3 endpoint in question does not support this feature at all, Virtual Host Access may be disabled.
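The two addressing styles differ only in where the bucket name appears. The sketch below uses the example hostnames from the text. It is not FileFly's request-building code, and the object key and function name are hypothetical.

```python
from urllib.parse import quote, urlsplit


def object_url(endpoint: str, bucket: str, key: str, virtual_host: bool = True) -> str:
    """Hypothetical helper: build an object URL in virtual-host style or path style."""
    parts = urlsplit(endpoint)
    # Percent-encode the key but keep '/' as a path separator
    encoded_key = quote(key, safe="/")
    if virtual_host:
        # Virtual-host style: the bucket becomes a DNS label in front of the endpoint host
        return f"{parts.scheme}://{bucket}.{parts.netloc}/{encoded_key}"
    # Path style: the bucket is the first path segment
    return f"{parts.scheme}://{parts.netloc}/{bucket}/{encoded_key}"
```

With `object_url("https://s3.example.com", "bucket", "dir/a b.txt")`, the virtual-host form addresses the host bucket.s3.example.com. This is why the endpoint must resolve and present a valid certificate for per-bucket hostnames.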

Note: When using Virtual Host Access in conjunction with HTTPS (recommended) it is important to ensure that the endpoint's TLS certificate has been created correctly. For example, if the endpoint FQDN is s3.example.com, the certificate must contain Subject Alternative Names (SANs) for both s3.example.com and *.s3.example.com.
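The reason both SANs are needed can be checked with a simplified model of DNS wildcard matching, in which '*' may only be the entire left-most label and stands for exactly one label. This is a sketch for reasoning about certificate contents only. The function names are hypothetical, and it is no substitute for the certificate validation performed by a real TLS library.

```python
def san_covers(hostname: str, san: str) -> bool:
    """Simplified check of one DNS SAN; '*' allowed only as the whole left-most label."""
    host_labels = hostname.lower().rstrip(".").split(".")
    san_labels = san.lower().rstrip(".").split(".")
    if len(host_labels) != len(san_labels):
        # A wildcard stands for exactly one label, so label counts must match
        return False
    if san_labels[0] != "*" and san_labels[0] != host_labels[0]:
        return False
    return san_labels[1:] == host_labels[1:]


def certificate_covers(hostname: str, sans: list) -> bool:
    """True if any SAN in the (hypothetical) certificate covers hostname."""
    return any(san_covers(hostname, san) for san in sans)
```

Under these rules, *.s3.example.com covers mybucket.s3.example.com but not s3.example.com itself, hence the separate SAN. A bucket name containing dots adds extra labels, so a single-level wildcard cannot cover it.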

...


Please refer to 5.7.4 for S3 metadata field details.

...

Before proceeding with the installation, the following will be required:

a Microsoft Azure Account

a Storage Account within Azure – both General Purpose and Blob Storage (with Hot and Cool access tiers) account types are supported

a FileFly license that includes an entitlement for Microsoft Azure


...

Before proceeding with the installation, the following will be required:

a Google Account

a FileFly license that includes an entitlement for Google Cloud Storage


...

Using the Google Cloud Platform web console, create a new Service Account in the desired project for the exclusive use of FileFly. Create a P12 format private key for this Service Account. Record the Service Account ID and store the downloaded private key file securely for use in later steps.

Create a Storage Bucket exclusively for FileFly data.

For FileFly use, bucket names must:

be 3-40 characters long

contain only lowercase letters, numbers and dashes (-)

not begin or end with a dash

not contain adjacent dashes
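You can check a proposed bucket name against the four rules above before creating it. This sketch checks only the FileFly rules listed here, not Google Cloud Storage's own naming rules, and the function name is hypothetical.

```python
import re

# One or more [a-z0-9] runs joined by single dashes: this rules out leading,
# trailing and adjacent dashes in one pattern
_BUCKET_NAME = re.compile(r"[a-z0-9]+(?:-[a-z0-9]+)*")


def valid_filefly_bucket_name(name: str) -> bool:
    """Hypothetical helper: apply the length and character rules for FileFly buckets."""
    return 3 <= len(name) <= 40 and _BUCKET_NAME.fullmatch(name) is not None
```

For example, "filefly-data-01" passes, while "-data", "data-", "a--b" and "FileFly" are rejected.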

Edit the bucket's permissions to add the new Service Account as a member with the 'Storage Object Admin' role.

...

The FileFly DrTool application allows for the recovery of files where normal backup and restore procedures have failed. Storage backup recommendations and considerations are covered in 3.4.

It is recommended to regularly run a 'Create Recovery File From Source' Policy to generate an up-to-date list of source–destination mappings.

FileFly DrTool is installed as part of DataCore FileFly Tools.

Note: Community Edition licenses do not include FileFly DrTool functionality.

...

Recovery files are normally generated by running 'Create Recovery File From Source' Policies in FileFly Admin Portal. To open a file previously generated by FileFly Admin Portal:

Open DataCore FileFly DrTool from the Start Menu

Go to File → Open From FileFly Admin Portal… → Recovery File From Source

Select a Recovery file to open

Older versions of Recovery files may be found via the 'Recovery' page in FileFly Admin Portal.

...

In the 'Scheme Pattern' field, use the name of the Scheme only (e.g. win, not win:// or win://servername). This field may be left blank to return results for all schemes.

This field matches against the scheme section of a URI:

{scheme}://{servername}/[{path}]


In the 'Server Pattern' field, use the full server name or a wildcard expression.

This field matches against the servername section of a URI:

{scheme}://{servername}/[{path}]

Examples:

server65.example.com – will match only the specified server

*.finance.example.com – will match all servers in the 'finance' subdomain
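The way the Scheme Pattern and Server Pattern fields apply to the {scheme}://{servername}/[{path}] sections can be approximated as follows. This is a sketch under assumptions. Python's `fnmatch` stands in for DrTool's wildcard syntax, and its '*' also matches across dots, so *.finance.example.com would match a.b.finance.example.com as well. DrTool's exact wildcard semantics are not stated here. The function name is hypothetical.

```python
from fnmatch import fnmatchcase
from urllib.parse import urlsplit


def uri_matches(uri: str, scheme_pattern: str, server_pattern: str) -> bool:
    """Hypothetical approximation of the Scheme Pattern and Server Pattern fields."""
    parts = urlsplit(uri)  # urlsplit() already lower-cases the scheme and host
    # A blank scheme pattern matches every scheme; otherwise the name must match exactly
    if scheme_pattern and parts.scheme != scheme_pattern.lower():
        return False
    # Assumption: fnmatch-style wildcards, compared case-insensitively like host names
    return fnmatchcase(parts.hostname or "", server_pattern.lower())
```

With these definitions, a blank scheme and the pattern *.finance.example.com select hr1.finance.example.com and skip server65.example.com.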


The 'File Pattern' field will match either filenames only (and search within all directories), or filenames qualified with directory paths in the same manner as filename patterns in FileFly Admin Portal Rules – see A.

For the purposes of file pattern matching, the top-level directory is considered to be the top level of the entire URI path. This may differ from the top level of the original Source URI.
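The difference between the two top levels can be seen by comparing the same file's path as seen from the URI root and from the Source URI. Both URIs here are hypothetical, the function names are illustrative, and the sketch does not implement the pattern syntax described in A.

```python
from pathlib import PurePosixPath
from urllib.parse import urlsplit


def uri_path(uri: str) -> PurePosixPath:
    """Path section of {scheme}://{servername}/[{path}], rooted at the URI's top level."""
    return PurePosixPath(urlsplit(uri).path or "/")


def path_below_source(uri: str, source_uri: str) -> PurePosixPath:
    """The same file's path relative to the original Source URI."""
    return uri_path(uri).relative_to(uri_path(source_uri))


# Hypothetical URIs for illustration
FILE_URI = "win://fs1.example.com/share/projects/2023/report.docx"
SOURCE_URI = "win://fs1.example.com/share/projects"
```

Here DrTool treats share as the top-level directory, whereas relative to the Source URI the top level would be 2023. A pattern originally written against the Source URI would therefore need to account for the share/projects prefix.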

...

Analyze assists in creating simple filters.

Click Analyze

Analyze will display a breakdown by scheme, server and file type

Select a subset of the results by making a selection in each column

Click Filter to create a filter based on the selection
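Conceptually, Analyze counts results per column, and Filter keeps only results that match the selection in every column. The sketch below models that idea. It is not DrTool's implementation, and it assumes that "file type" means the lower-cased filename extension. The function names are hypothetical.

```python
from collections import Counter
from pathlib import PurePosixPath
from urllib.parse import urlsplit


def classify(uri: str) -> tuple:
    """Split a URI into (scheme, server, file type); file type = extension (assumption)."""
    parts = urlsplit(uri)
    file_type = PurePosixPath(parts.path).suffix.lower() or "(none)"
    return parts.scheme, parts.hostname, file_type


def analyze(uris) -> tuple:
    """Count results per scheme, per server and per file type (one Counter per column)."""
    columns = (Counter(), Counter(), Counter())
    for uri in uris:
        for column, value in zip(columns, classify(uri)):
            column[value] += 1
    return columns


def make_filter(schemes: set, servers: set, file_types: set):
    """Keep a result only if it matches the selection in every column."""
    def keep(uri: str) -> bool:
        scheme, server, file_type = classify(uri)
        return scheme in schemes and server in servers and file_type in file_types
    return keep
```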


...

To recover files interactively:

Select the results for which files will be recovered

Click Edit → Recover File…


All files may be recovered either as a batch process using the command line (see 6.7) or interactively as follows:

Click Edit → Recover All Files…

Note: Missing folders will be recreated as required to contain the recovered files. However, these folders will not have ACLs applied to them, so take care when recreating folder structures in sensitive areas.

...

When recovering to a new location, always use an up-to-date Recovery file generated by a 'Create Recovery File From Source' Policy.

To rewrite source file URIs to the new location, use the -csu command line option to update the prefix of each URI. Once these URI substitutions have been applied (and checked in the GUI) files may be recovered as previously outlined. The -csu option is further detailed in 6.7.
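The effect of a prefix substitution on each URI can be modelled as below. This sketch does not reproduce the -csu syntax (see 6.7 for that), and the function name and URIs are hypothetical. It matches only on a path boundary, so a prefix ending in /share does not also rewrite /share2. That boundary rule is this sketch's own choice; DrTool's behavior in that case is not stated here.

```python
def substitute_prefix(uri: str, old_prefix: str, new_prefix: str) -> str:
    """Hypothetical model: swap old_prefix for new_prefix when uri lies at or below it."""
    old = old_prefix.rstrip("/")
    new = new_prefix.rstrip("/")
    # Match on a path boundary so that .../share does not also rewrite .../share2
    if uri == old or uri.startswith(old + "/"):
        return new + uri[len(old):]
    return uri
```

Whichever tool performs the rewrite, check the substituted URIs in the GUI before recovering any files, as described above.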

Important: DO NOT create stubs in a new location and then continue to use the old location. To avoid incorrect reference counts, only one set of stubs should exist at any given time.

...

In FileFly DrTool, source files may be updated to reflect a destination URI change through use of the -cmu command line option – detailed in 6.7.